BoxCoxFactory

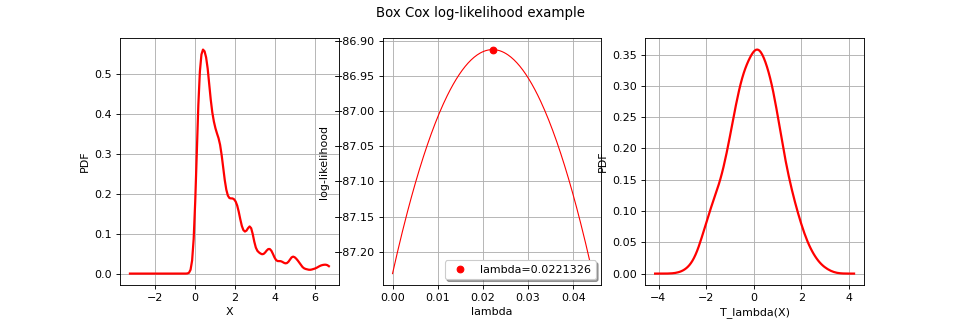


- class BoxCoxFactory(*args)

BoxCox transformation estimator.

Notes

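The class BoxCoxFactory builds a Box Cox transformation from data. The Box Cox transformation $h_{\lambda, \alpha}: \mathbb{R}^d \rightarrow \mathbb{R}^d$ maps a sample into a new sample following a normal distribution with independent components. That sample may be the realization of a process as well as the realization of a distribution.

In the multivariate case, we proceed component by component: $h_{\lambda_i, \alpha_i}: \mathbb{R} \rightarrow \mathbb{R}$, which writes:

$$h_{\lambda_i, \alpha_i}(x) = \begin{cases} \dfrac{(x + \alpha_i)^{\lambda_i} - 1}{\lambda_i} & \text{if } \lambda_i \neq 0 \\ \log(x + \alpha_i) & \text{if } \lambda_i = 0 \end{cases}$$

for all $x + \alpha_i > 0$.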
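As a minimal illustration of the component-wise map, the following plain-Python sketch assumes the usual Box Cox definition with a shift: $((x + \alpha)^\lambda - 1)/\lambda$ for $\lambda \neq 0$ and $\log(x + \alpha)$ for $\lambda = 0$. The helper names `box_cox` and `box_cox_point` are hypothetical and are not part of the OpenTURNS API.

```python
import math


def box_cox(x, lam, shift=0.0):
    """Univariate Box Cox map (hypothetical helper, not an OpenTURNS call)."""
    shifted = x + shift
    if shifted <= 0.0:
        raise ValueError("x + shift must be strictly positive")
    if lam == 0.0:
        return math.log(shifted)
    return (shifted ** lam - 1.0) / lam


def box_cox_point(point, lambdas, shifts):
    """Multivariate case: apply the map component by component."""
    return [box_cox(x, lam, a) for x, lam, a in zip(point, lambdas, shifts)]


print(box_cox_point([1.0, 4.0], [0.0, 0.5], [0.0, 0.0]))  # [0.0, 2.0]
```

The first component uses the logarithmic branch and the second the power branch, so both cases of the definition are exercised.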

The Box Cox transformation can also be estimated together with a general linear model through GeneralLinearModelAlgorithm. The objective is to estimate the most likely surrogate model (general linear model) which links the input data $x$ and $h_\lambda(y)$. The parameters $\lambda$ are calibrated so as to maximize the general linear model's likelihood function. In that context, a CovarianceModel and a Basis have to be fixed.

Methods

| Method | Description |
|---|---|
| build(*args) | Estimate the Box Cox transformation. |
| buildWithGLM(*args) | Estimate the Box Cox transformation with general linear model. |
| buildWithGraph(*args) | Estimate the Box Cox transformation with graph output. |
| buildWithLM(*args) | Estimate the Box Cox transformation with linear model. |
| getClassName() | Accessor to the object's name. |
| getId() | Accessor to the object's id. |
| getName() | Accessor to the object's name. |
| getOptimizationAlgorithm() | Accessor to the solver. |
| getShadowedId() | Accessor to the object's shadowed id. |
| getVisibility() | Accessor to the object's visibility state. |
| hasName() | Test if the object is named. |
| hasVisibleName() | Test if the object has a distinguishable name. |
| setName(name) | Accessor to the object's name. |
| setOptimizationAlgorithm(solver) | Accessor to the solver. |
| setShadowedId(id) | Accessor to the object's shadowed id. |
| setVisibility(visible) | Accessor to the object's visibility state. |

- __init__(*args)

- build(*args)

Estimate the Box Cox transformation.

- Parameters:

- Returns:

- transform : BoxCoxTransform

The estimated Box Cox transformation.

Notes

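We describe the estimation in the univariate case, when no surrogate model is estimated. Only the parameter $\lambda$ is estimated. To simplify the notation, we omit the mention of $\alpha$ in $h_\lambda$.

We note $(x_0, \dots, x_{N-1})$ a sample of $X$. We suppose that $h_\lambda(X) \sim \mathcal{N}(\beta, \sigma^2)$.

The parameters $(\beta, \sigma, \lambda)$ are estimated by maximum likelihood. We note $\Phi_{\beta, \sigma}$ and $\phi_{\beta, \sigma}$ respectively the cumulative distribution function and the probability density function of the $\mathcal{N}(\beta, \sigma^2)$ distribution.

We have:

$$\forall v > 0, \quad \mathbb{P}(X \leq v) = \mathbb{P}\left(h_\lambda(X) \leq h_\lambda(v)\right) = \Phi_{\beta, \sigma}\left(h_\lambda(v)\right)$$

from which we derive the probability density function $p$ of $X$:

$$p(v) = h_\lambda'(v) \, \phi_{\beta, \sigma}\left(h_\lambda(v)\right) = v^{\lambda - 1} \phi_{\beta, \sigma}\left(h_\lambda(v)\right)$$

which gives the likelihood of the values $(x_0, \dots, x_{N-1})$:

$$L(\beta, \sigma, \lambda) = \frac{1}{(2\pi)^{N/2} \sigma^{N}} \exp\left[-\frac{1}{2\sigma^2} \sum_{k=0}^{N-1} \left(h_\lambda(x_k) - \beta\right)^2\right] \prod_{k=0}^{N-1} x_k^{\lambda - 1}$$

We notice that for each fixed $\lambda$, the likelihood is proportional to the Gaussian likelihood which estimates $(\beta, \sigma^2)$ from the transformed sample.

Thus, the maximum likelihood estimators of $(\beta, \sigma^2)$ for a given $\lambda$ are:

$$\hat{\beta}(\lambda) = \frac{1}{N} \sum_{k=0}^{N-1} h_\lambda(x_k), \qquad \hat{\sigma}^2(\lambda) = \frac{1}{N} \sum_{k=0}^{N-1} \left(h_\lambda(x_k) - \hat{\beta}(\lambda)\right)^2$$

Substituting these expressions in the likelihood and taking the logarithm leads to:

$$\ell(\lambda) = \log L\left(\hat{\beta}(\lambda), \hat{\sigma}(\lambda), \lambda\right) = c - \frac{N}{2} \log\left[\hat{\sigma}^2(\lambda)\right] + (\lambda - 1) \sum_{k=0}^{N-1} \log(x_k)$$

where $c$ is a constant that does not depend on $\lambda$.

The parameter $\hat{\lambda}$ is the one maximising $\ell(\lambda)$.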
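The univariate estimation can be sketched in plain Python by maximizing the profile log-likelihood $-\frac{N}{2}\log\hat{\sigma}^2(\lambda) + (\lambda - 1)\sum_k \log x_k$ over $\lambda$. This is an illustrative sketch, not the OpenTURNS implementation: it assumes strictly positive data and no shift, drops the additive constant, and replaces the numerical solver with a coarse grid search. All helper names are hypothetical.

```python
import math
from statistics import NormalDist


def box_cox(x, lam):
    # Treat tiny |lambda| as 0 to avoid cancellation in (x**lam - 1) / lam.
    if abs(lam) < 1e-12:
        return math.log(x)
    return (x ** lam - 1.0) / lam


def profile_log_likelihood(sample, lam):
    """Profile log-likelihood in lambda, up to an additive constant."""
    n = len(sample)
    h = [box_cox(x, lam) for x in sample]
    beta_hat = sum(h) / n
    sigma2_hat = sum((v - beta_hat) ** 2 for v in h) / n
    jacobian = (lam - 1.0) * sum(math.log(x) for x in sample)
    return -0.5 * n * math.log(sigma2_hat) + jacobian


def estimate_lambda(sample, grid):
    """Grid search over candidate lambdas (assumption: a solver is not needed here)."""
    return max(grid, key=lambda lam: profile_log_likelihood(sample, lam))


# Synthetic data: exponential of standard normal quantiles, so that the
# logarithm (lambda = 0) is the transformation that restores normality.
n = 50
sample = [math.exp(NormalDist().inv_cdf((k + 0.5) / n)) for k in range(n)]
grid = [i / 100.0 for i in range(-200, 201)]
lambda_hat = estimate_lambda(sample, grid)
print(lambda_hat)
```

On this synthetic sample the selected value lies at or next to 0, which corresponds to the logarithmic transformation.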

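In the case of a surrogate model estimate, we note $(x_0, \dots, x_{N-1})$ the input sample of $X$ and $(y_0, \dots, y_{N-1})$ the output sample of $Y$. We suppose the general linear model link $h_\lambda(Y) = F(x)^t \beta + Z$ with:

$$F(x) = \left(f_1(x), \dots, f_n(x)\right)^t$$

$(f_j)_{j = 1, \dots, n}$ is a functional basis with $f_j: \mathbb{R}^d \rightarrow \mathbb{R}$ for all $j$, $\beta$ are the coefficients of the linear combination and $Z$ is a zero-mean Gaussian process with a stationary covariance function $C_{\sigma, \theta}$. This implies that $h_\lambda(Y) \sim \mathcal{N}\left(F(x)^t \beta, C_{\sigma, \theta}\right)$.

Noting $h = \left(h_\lambda(y_0), \dots, h_\lambda(y_{N-1})\right)^t$ and $F$ the $N \times n$ matrix with entries $f_j(x_k)$, the log-likelihood to be maximized writes as follows:

$$\ell_{glm}(\lambda) = \log L\left(\hat{\beta}, \hat{\sigma}, \hat{\theta}, \lambda\right) = c - \frac{1}{2} \log\left|C_{\hat{\sigma}, \hat{\theta}}\right| - \frac{1}{2} \left(h - F\hat{\beta}\right)^t C_{\hat{\sigma}, \hat{\theta}}^{-1} \left(h - F\hat{\beta}\right) + (\lambda - 1) \sum_{k=0}^{N-1} \log(y_k)$$

where $c$ is a constant that does not depend on $\lambda$ and $C_{\hat{\sigma}, \hat{\theta}}$ is the matrix resulting from the discretization of the covariance model over the input sample. The parameter $\hat{\lambda}$ is the one maximising $\ell_{glm}(\lambda)$.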
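The surrogate-model case can be illustrated in its simplest setting. The sketch below assumes uncorrelated noise (a covariance matrix proportional to the identity) and an affine trend in a single input, so that the log-likelihood profiled over the trend coefficients and the variance reduces to $-\frac{N}{2}\log\hat{\sigma}^2(\lambda) + (\lambda - 1)\sum_k \log y_k$, with $\hat{\sigma}^2(\lambda)$ the mean squared least-squares residual. It is not the OpenTURNS implementation; the helper names and the grid search are assumptions made for the example.

```python
import math


def box_cox(y, lam):
    # Treat tiny |lambda| as 0 to avoid cancellation in (y**lam - 1) / lam.
    if abs(lam) < 1e-12:
        return math.log(y)
    return (y ** lam - 1.0) / lam


def residual_variance(xs, hs):
    """Mean squared residual of the least-squares fit hs ~ b0 + b1 * xs."""
    n = len(xs)
    mx, mh = sum(xs) / n, sum(hs) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    b1 = sum((x - mx) * (h - mh) for x, h in zip(xs, hs)) / sxx
    b0 = mh - b1 * mx
    return sum((h - b0 - b1 * x) ** 2 for x, h in zip(xs, hs)) / n


def profile_log_likelihood(xs, ys, lam):
    """Profile log-likelihood in lambda, up to an additive constant."""
    n = len(ys)
    hs = [box_cox(y, lam) for y in ys]
    jacobian = (lam - 1.0) * sum(math.log(y) for y in ys)
    return -0.5 * n * math.log(residual_variance(xs, hs)) + jacobian


# Deterministic data: log(y) is affine in x plus a small perturbation.
n = 20
xs = [-1.0 + 2.0 * k / (n - 1) for k in range(n)]
ys = [math.exp(3.0 * x + 3.1 + 0.01 * math.sin(7.0 * k)) for k, x in enumerate(xs)]
grid = [i / 100.0 for i in range(-100, 201)]
lambda_hat = max(grid, key=lambda lam: profile_log_likelihood(xs, ys, lam))
print(lambda_hat)
```

Because the data are built so that the logarithm of the output is nearly affine in the input, the selected value is close to 0.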

Examples

Estimate the Box Cox transformation from a sample:

>>> import openturns as ot
>>> sample = ot.Exponential(2).getSample(10)
>>> factory = ot.BoxCoxFactory()
>>> transform = factory.build(sample)
>>> estimatedLambda = transform.getLambda()

Estimate the Box Cox transformation from a field:

>>> indices = [10, 5]
>>> mesher = ot.IntervalMesher(indices)
>>> interval = ot.Interval([0.0, 0.0], [2.0, 1.0])
>>> mesh = mesher.build(interval)
>>> amplitude = [1.0]
>>> scale = [0.2, 0.2]
>>> covModel = ot.ExponentialModel(scale, amplitude)
>>> Xproc = ot.GaussianProcess(covModel, mesh)
>>> g = ot.SymbolicFunction(['x1'], ['exp(x1)'])
>>> dynTransform = ot.ValueFunction(g, mesh)
>>> XtProcess = ot.CompositeProcess(dynTransform, Xproc)
>>> field = XtProcess.getRealization()
>>> transform = ot.BoxCoxFactory().build(field)

- buildWithGLM(*args)

Estimate the Box Cox transformation with general linear model.

Refer to build() for details.

- Parameters:

- inputSample, outputSample : Sample or 2d-array

The input and output samples of a model evaluated apart.

- covarianceModel : CovarianceModel

Covariance model. Its input dimension should equal the input sample's dimension and its output dimension should equal the output sample's dimension. See note for some particular applications.

- basis : Basis, optional

Functional basis to estimate the trend. If the output dimension is greater than 1, the same basis is used for each marginal output.

- shift : Point

It ensures that when shifted, the data are all positive. By default the opposite of the min vector of the data is used if some data are negative.

- Returns:

- transform : BoxCoxTransform

The estimated Box Cox transformation.

- generalLinearModelResult : GeneralLinearModelResult

The structure that contains the results of the general linear model algorithm.

Examples

Estimation of a general linear model:

>>> import openturns as ot
>>> ot.RandomGenerator.SetSeed(0)
>>> inputSample = ot.Uniform(-1.0, 1.0).getSample(20)
>>> outputSample = ot.Sample(inputSample)
>>> # Evaluation of y = ax + b (a: scale, b: translate)
>>> outputSample = outputSample * [3] + [3.1]
>>> # Inverse transformation + small noise
>>> def f(x): import math; return [math.exp(x[0])]
>>> inv_transfo = ot.PythonFunction(1, 1, f)
>>> outputSample = inv_transfo(outputSample) + ot.Normal(0, 1.0e-2).getSample(20)
>>> # Estimation
>>> basis = ot.LinearBasisFactory(1).build()
>>> covarianceModel = ot.DiracCovarianceModel()
>>> shift = [1.0e-1]
>>> boxCox, result = ot.BoxCoxFactory().buildWithGLM(inputSample, outputSample, covarianceModel, basis, shift)

- buildWithGraph(*args)

Estimate the Box Cox transformation with graph output.

- Parameters:

- Returns:

- transform : BoxCoxTransform

The estimated Box Cox transformation.

- graph : Graph

The graph plots the evolution of the likelihood with respect to the value of $\lambda_i$ for each component $i$. It makes it possible to detect the optimal values graphically.

- buildWithLM(*args)

Estimate the Box Cox transformation with linear model.

Refer to build() for details.

- Parameters:

- inputSample, outputSample : Sample or 2d-array

The input and output samples of a model evaluated apart.

- covarianceModel : CovarianceModel

Covariance model. Its input dimension should equal the input sample's dimension and its output dimension should equal the output sample's dimension. See note for some particular applications.

- basis : Basis, optional

Functional basis to estimate the trend. If the output dimension is greater than 1, the same basis is used for each marginal output.

- shift : Point

It ensures that when shifted, the data are all positive. By default the opposite of the min vector of the data is used if some data are negative.

- Returns:

- transform : BoxCoxTransform

The estimated Box Cox transformation.

- linearModelResult : LinearModelResult

The structure that contains the results of the linear model algorithm.

Examples

Estimation of a linear model:

>>> import openturns as ot
>>> ot.RandomGenerator.SetSeed(0)
>>> x = ot.Uniform(-1.0, 1.0).getSample(20)
>>> y = ot.Sample(x)
>>> # Evaluation of y = ax + b (a: scale, b: translate)
>>> y = y * [3] + [3.1]
>>> # Inverse transformation + small noise
>>> inv_transformation = ot.SymbolicFunction('x', 'exp(x)')
>>> y = inv_transformation(y) + ot.Normal(0, 1.0e-4).getSample(20)
>>> # Estimation
>>> shift = [1.0e-1]
>>> boxCox, result = ot.BoxCoxFactory().buildWithLM(x, y, shift)

- getClassName()

Accessor to the object’s name.

- Returns:

- class_name : str

The object class name (object.__class__.__name__).

- getId()

Accessor to the object’s id.

- Returns:

- id : int

Internal unique identifier.

- getName()

Accessor to the object’s name.

- Returns:

- name : str

The name of the object.

- getOptimizationAlgorithm()

Accessor to the solver.

- Returns:

- solver : OptimizationAlgorithm

The solver used for numerical optimization.

- getShadowedId()

Accessor to the object’s shadowed id.

- Returns:

- id : int

Internal unique identifier.

- getVisibility()

Accessor to the object’s visibility state.

- Returns:

- visible : bool

Visibility flag.

- hasName()

Test if the object is named.

- Returns:

- hasName : bool

True if the name is not empty.

- hasVisibleName()

Test if the object has a distinguishable name.

- Returns:

- hasVisibleName : bool

True if the name is not empty and not the default one.

- setName(name)

Accessor to the object’s name.

- Parameters:

- name : str

The name of the object.

- setOptimizationAlgorithm(solver)

Accessor to the solver.

- Parameters:

- solver : OptimizationAlgorithm

The solver used for numerical optimization.

- setShadowedId(id)

Accessor to the object’s shadowed id.

- Parameters:

- id : int

Internal unique identifier.

- setVisibility(visible)

Accessor to the object’s visibility state.

- Parameters:

- visible : bool

Visibility flag.

OpenTURNS
