Conditional distributions

The library offers some modelling capabilities for conditional distributions:

Case 1: Create a joint distribution using conditioning,

Case 2: Condition a joint distribution by some values of its marginals,

Case 3: Create a distribution whose parameters are random,

Case 4: Create a Bayesian posterior distribution.

Case 1: Create a joint distribution using conditioning

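The objective is to create the joint distribution of the random vector $(\boldsymbol{Y}, \boldsymbol{X})$ where $\boldsymbol{Y}$ follows the distribution $\mathcal{L}_{\boldsymbol{Y}}$ and $\boldsymbol{X}|\boldsymbol{\Theta}$ follows the distribution $\mathcal{L}_{\boldsymbol{X}|\boldsymbol{\Theta}}$, where $\boldsymbol{\Theta} = g(\boldsymbol{Y})$ with $g$ a link function whose input dimension is the dimension of $\boldsymbol{Y}$ and whose output dimension is the dimension of $\boldsymbol{\Theta}$.

This distribution is limited to the continuous case, i.e. when both the conditioning and the conditioned distributions are continuous. Its probability density function is defined as:

$$f_{(\boldsymbol{Y}, \boldsymbol{X})}(\boldsymbol{y}, \boldsymbol{x}) = f_{\boldsymbol{X}|\boldsymbol{\theta} = g(\boldsymbol{y})}(\boldsymbol{x}) \, f_{\boldsymbol{Y}}(\boldsymbol{y})$$

with $f_{\boldsymbol{X}|\boldsymbol{\theta} = g(\boldsymbol{y})}$ the PDF of the distribution of $\boldsymbol{X}|\boldsymbol{\Theta}$ where $\boldsymbol{\Theta}$ has been replaced by $g(\boldsymbol{y})$, and $f_{\boldsymbol{Y}}$ the PDF of $\boldsymbol{Y}$.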

See the class JointByConditioningDistribution.
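As an illustration of the product rule behind this construction, here is a minimal sketch in plain Python (standard library only, not the library's API). It assumes a scalar conditioning variable with a standard normal distribution, a conditioned variable that is normal with mean $\theta$ and unit variance, and an identity link function; all three choices, and the function names, are hypothetical. Under these assumptions the joint vector is bivariate normal, which gives a closed form to compare against.

```python
import math


def normal_pdf(x, mean=0.0, std=1.0):
    """PDF of the univariate normal distribution."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def link(y):
    """Hypothetical link function g: here theta = y (identity)."""
    return y


def joint_pdf(y, x):
    """PDF of (Y, X): conditioned PDF evaluated at theta = g(y), times the PDF of Y."""
    theta = link(y)
    return normal_pdf(x, mean=theta, std=1.0) * normal_pdf(y)


def reference_pdf(y, x):
    """Closed form: (Y, X) is bivariate normal with covariance [[1, 1], [1, 2]]."""
    quad = 2.0 * y * y - 2.0 * x * y + x * x
    return math.exp(-0.5 * quad) / (2.0 * math.pi)


print(joint_pdf(0.3, -0.7), reference_pdf(0.3, -0.7))
```

Both printed values should agree up to rounding, since the product of the two univariate densities is algebraically the bivariate normal density in this setting.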

Case 2: Condition a joint distribution by some values of its marginals

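Let $\boldsymbol{X}$ be a random vector of dimension $d$. Let $\mathcal{I} \subset \{1, \dots, d\}$ be a set of indices of components of $\boldsymbol{X}$, $\overline{\mathcal{I}}$ its complement in $\{1, \dots, d\}$ and $\boldsymbol{x}_{\mathcal{I}}$ a real vector of dimension equal to the cardinality of $\mathcal{I}$.

The objective is to create the distribution of:

$$\boldsymbol{X}_{\overline{\mathcal{I}}} \,|\, \boldsymbol{X}_{\mathcal{I}} = \boldsymbol{x}_{\mathcal{I}}$$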

See the class PointConditionalDistribution.

This class requires the following features:

each component $X_i$ is continuous or discrete: e.g., it cannot be a Mixture of discrete and continuous distributions,

the copula of $\boldsymbol{X}$ is continuous: e.g., it cannot be the MinCopula,

the random vector $\boldsymbol{X}_{\overline{\mathcal{I}}}$ is continuous or discrete: all its components must be discrete or all its components must be continuous,

the random vector $\boldsymbol{X}_{\mathcal{I}}$ may have some discrete components and some continuous components.

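Then, the PDF of $\boldsymbol{X}_{\overline{\mathcal{I}}} | \boldsymbol{X}_{\mathcal{I}} = \boldsymbol{x}_{\mathcal{I}}$ (probability density function if $\boldsymbol{X}_{\overline{\mathcal{I}}}$ is continuous, probability distribution function if $\boldsymbol{X}_{\overline{\mathcal{I}}}$ is discrete) is defined by the following expression, which assumes a particular order of the conditioned components among the whole set of components for ease of reading:

$$p_{\boldsymbol{X}_{\overline{\mathcal{I}}} | \boldsymbol{X}_{\mathcal{I}} = \boldsymbol{x}_{\mathcal{I}}}(\boldsymbol{x}_{\overline{\mathcal{I}}}) = \frac{p_{\boldsymbol{X}}(\boldsymbol{x}_{\overline{\mathcal{I}}}, \boldsymbol{x}_{\mathcal{I}})}{p_{\boldsymbol{X}_{\mathcal{I}}}(\boldsymbol{x}_{\mathcal{I}})} \tag{1}$$

where:

$$p_{\boldsymbol{X}}(\boldsymbol{x}) = \left( \prod_{i=1}^{d} p_i(x_i) \right) c\big(F_1(x_1), \dots, F_d(x_d)\big)$$

with $F_i$ the CDF of $X_i$ and:

$c$ is the probability density copula of $\boldsymbol{X}$,

if $X_i$ is a continuous component, $p_i$ is its probability density function,

if $X_i$ is a discrete component, $p_i = \sum_{x^i_j \in S^i} P(X_i = x^i_j) \, \delta_{x^i_j}$ where $S^i$ is its support and $\delta_{x^i_j}$ the Dirac distribution centered on $x^i_j$.

Then, if $\boldsymbol{X}_{\overline{\mathcal{I}}}$ is continuous, we have:

$$p_{\boldsymbol{X}_{\mathcal{I}}}(\boldsymbol{x}_{\mathcal{I}}) = \int p_{\boldsymbol{X}}(\boldsymbol{x}_{\overline{\mathcal{I}}}, \boldsymbol{x}_{\mathcal{I}}) \, d\boldsymbol{x}_{\overline{\mathcal{I}}}$$

and if $\boldsymbol{X}_{\overline{\mathcal{I}}}$ is discrete with its support denoted by $S(\boldsymbol{X}_{\overline{\mathcal{I}}})$, we have:

$$p_{\boldsymbol{X}_{\mathcal{I}}}(\boldsymbol{x}_{\mathcal{I}}) = \sum_{\boldsymbol{x}_{\overline{\mathcal{I}}} \in S(\boldsymbol{X}_{\overline{\mathcal{I}}})} p_{\boldsymbol{X}}(\boldsymbol{x}_{\overline{\mathcal{I}}}, \boldsymbol{x}_{\mathcal{I}})$$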
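The ratio in (1) can be checked numerically on a case where the answer is known. The sketch below uses only the Python standard library, not the library's own classes. It assumes a standard bivariate normal vector with a hypothetical correlation `RHO`, conditions on the first component, and computes the PDF of the conditioning margin by a trapezoidal rule over a range wide enough to hold essentially all the mass. The closed form used for comparison is the classical conditional normal distribution.

```python
import math

RHO = 0.8  # assumed correlation, chosen for illustration only


def joint_pdf(x0, x1):
    """PDF of a standard bivariate normal vector with correlation RHO."""
    det = 1.0 - RHO * RHO
    quad = (x0 * x0 - 2.0 * RHO * x0 * x1 + x1 * x1) / det
    return math.exp(-0.5 * quad) / (2.0 * math.pi * math.sqrt(det))


def marginal_pdf_x0(x0, lower=-12.0, upper=12.0, n=4000):
    """Integrate the joint PDF over x1 with the trapezoidal rule."""
    h = (upper - lower) / n
    total = 0.5 * (joint_pdf(x0, lower) + joint_pdf(x0, upper))
    for k in range(1, n):
        total += joint_pdf(x0, lower + k * h)
    return total * h


def conditional_pdf(x1, x0):
    """PDF of X1 given X0 = x0: joint PDF divided by the PDF of the conditioning margin."""
    return joint_pdf(x0, x1) / marginal_pdf_x0(x0)


def reference_pdf(x1, x0):
    """Closed form: X1 | X0 = x0 is normal with mean RHO * x0 and variance 1 - RHO**2."""
    std = math.sqrt(1.0 - RHO * RHO)
    z = (x1 - RHO * x0) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


print(conditional_pdf(0.5, 1.0), reference_pdf(0.5, 1.0))
```

The numerical integration here stands in for the general case; for normal vectors the closed form is available directly, which is the kind of simplification described next.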

Simplification mechanisms to compute (1) are implemented for some distributions. We detail some cases where a simplification has been implemented.

Elliptical distributions: This is the case for normal and Student distributions. If $\boldsymbol{X}$ follows a normal or a Student distribution, then $\boldsymbol{X}_{\overline{\mathcal{I}}} | \boldsymbol{X}_{\mathcal{I}} = \boldsymbol{x}_{\mathcal{I}}$ respectively follows a normal or a Student distribution with modified parameters.

See Conditional Normal and Conditional Student for the formulas of the conditional distributions.

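Mixture distributions: Let $\boldsymbol{X}$ be a random vector of dimension $d$ whose distribution is defined by a Mixture of $N$ discrete or continuous atoms. Let us denote by $(p_1, \dots, p_N)$ the PDF (Probability Density Function for continuous atoms and Probability Distribution Function for discrete ones) of each atom, with respective weights $(w_1, \dots, w_N)$.

Then we get:

$$p_{\boldsymbol{X}}(\boldsymbol{x}) = \sum_{k=1}^{N} w_k \, p_k(\boldsymbol{x})$$

We denote by $p_{k, \overline{\mathcal{I}}|\mathcal{I}}$ the PDF of the $k$-th atom conditioned by $\boldsymbol{X}_{\mathcal{I}} = \boldsymbol{x}_{\mathcal{I}}$, and by $p_{k, \mathcal{I}}$ the PDF of the margin of the $k$-th atom on the components in $\mathcal{I}$. Then, if $p_{\boldsymbol{X}_{\mathcal{I}}}(\boldsymbol{x}_{\mathcal{I}}) \neq 0$, we get:

$$p_{\boldsymbol{X}_{\overline{\mathcal{I}}} | \boldsymbol{X}_{\mathcal{I}} = \boldsymbol{x}_{\mathcal{I}}}(\boldsymbol{x}_{\overline{\mathcal{I}}}) = \frac{\sum_{k=1}^{N} w_k \, p_k(\boldsymbol{x}_{\overline{\mathcal{I}}}, \boldsymbol{x}_{\mathcal{I}})}{p_{\boldsymbol{X}_{\mathcal{I}}}(\boldsymbol{x}_{\mathcal{I}})}$$

which, using $p_k(\boldsymbol{x}_{\overline{\mathcal{I}}}, \boldsymbol{x}_{\mathcal{I}}) = p_{k, \mathcal{I}}(\boldsymbol{x}_{\mathcal{I}}) \, p_{k, \overline{\mathcal{I}}|\mathcal{I}}(\boldsymbol{x}_{\overline{\mathcal{I}}})$, finally leads to:

$$p_{\boldsymbol{X}_{\overline{\mathcal{I}}} | \boldsymbol{X}_{\mathcal{I}} = \boldsymbol{x}_{\mathcal{I}}}(\boldsymbol{x}_{\overline{\mathcal{I}}}) = \sum_{k=1}^{N} \alpha_k \, p_{k, \overline{\mathcal{I}}|\mathcal{I}}(\boldsymbol{x}_{\overline{\mathcal{I}}}) \tag{2}$$

where $\alpha_k = \dfrac{w_k \, p_{k, \mathcal{I}}(\boldsymbol{x}_{\mathcal{I}})}{c}$ with $c = p_{\boldsymbol{X}_{\mathcal{I}}}(\boldsymbol{x}_{\mathcal{I}}) = \sum_{k=1}^{N} w_k \, p_{k, \mathcal{I}}(\boldsymbol{x}_{\mathcal{I}})$.

The constant $c$ normalizes the weights so that $\sum_{k=1}^{N} \alpha_k = 1$.

Noting that $p_{k, \overline{\mathcal{I}}|\mathcal{I}}$ is the PDF of the $k$-th atom conditioned by $\boldsymbol{X}_{\mathcal{I}} = \boldsymbol{x}_{\mathcal{I}}$, we show that the random vector $\boldsymbol{X}_{\overline{\mathcal{I}}} | \boldsymbol{X}_{\mathcal{I}} = \boldsymbol{x}_{\mathcal{I}}$ is the Mixture built from the $\boldsymbol{x}_{\mathcal{I}}$-conditioned atoms with weights $\alpha_k$.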

Conclusion: The conditional distribution of a Mixture is a Mixture of conditional distributions.
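To make the reweighting concrete, here is a minimal standard-library sketch (not the library's implementation). It assumes a two-atom mixture in dimension 2 where each atom has independent unit-variance normal components, so that conditioning an atom on its first component simply leaves the margin of its second component. The weights, means and function names are hypothetical.

```python
import math


def normal_pdf(x, mean):
    """PDF of a unit-variance normal distribution."""
    z = x - mean
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


weights = [0.3, 0.7]               # hypothetical mixture weights
means = [(-1.0, 0.0), (2.0, 3.0)]  # (mean of X0, mean of X1) for each atom


def conditioned_weights(x0):
    """New weights: old weight times the atom's margin PDF at x0, then normalized."""
    raw = [w * normal_pdf(x0, m0) for w, (m0, _) in zip(weights, means)]
    c = sum(raw)  # PDF of the conditioning margin X0 at x0
    return [r / c for r in raw]


def conditional_pdf(x1, x0):
    """Mixture of the conditioned atoms with the new weights."""
    alphas = conditioned_weights(x0)
    return sum(a * normal_pdf(x1, m1) for a, (_, m1) in zip(alphas, means))


def direct_ratio(x1, x0):
    """Same quantity computed as joint PDF over margin PDF."""
    joint = sum(
        w * normal_pdf(x0, m0) * normal_pdf(x1, m1)
        for w, (m0, m1) in zip(weights, means)
    )
    margin = sum(w * normal_pdf(x0, m0) for w, (m0, _) in zip(weights, means))
    return joint / margin


print(conditioned_weights(0.5), conditional_pdf(1.0, 0.5), direct_ratio(1.0, 0.5))
```

With the conditioning value 0.5, both atoms are equally distant from it along the first component, so the conditioned weights coincide with the original ones; any other value shifts weight toward the closer atom.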

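Kernel Mixture distributions: The Kernel Mixture distribution is a particular Mixture: all the weights are identical and all the kernels of the combination are of the same discrete or continuous family. The kernels are centered on the sample points. The multivariate kernel is a tensorized product of the same univariate kernel.

Let $\boldsymbol{X}$ be a random vector of dimension $d$ defined by a Kernel Mixture distribution based on the sample $(\boldsymbol{s}_1, \dots, \boldsymbol{s}_N)$ and the kernel $K$. In the continuous case, $K$ is the kernel PDF and we have:

$$p_{\boldsymbol{X}}(\boldsymbol{x}) = \sum_{q=1}^{N} \frac{1}{N} \, p_q(\boldsymbol{x})$$

where $p_q$ is the kernel normalized by the bandwidth $\boldsymbol{h} = (h_1, \dots, h_d)$:

$$p_q(\boldsymbol{x}) = \prod_{j=1}^{d} \frac{1}{h_j} K\!\left( \frac{x_j - s_{q,j}}{h_j} \right)$$

Following the Mixture case, we still have the relation (2). As the multivariate kernel is the tensorized product of the univariate kernel, we get:

$$p_{q, \overline{\mathcal{I}}|\mathcal{I}}(\boldsymbol{x}_{\overline{\mathcal{I}}}) = \prod_{j \in \overline{\mathcal{I}}} \frac{1}{h_j} K\!\left( \frac{x_j - s_{q,j}}{h_j} \right)$$

Conclusion: The conditional distribution of a Kernel Mixture is a Mixture whose atoms are the tensorized product of the kernel on the remaining components $\boldsymbol{x}_{\overline{\mathcal{I}}}$ and whose weights $\alpha_q$ are proportional to:

$$\alpha_q \propto \prod_{j \in \mathcal{I}} \frac{1}{h_j} K\!\left( \frac{x_j - s_{q,j}}{h_j} \right)$$

as we have in (2).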
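The same reweighting can be sketched for a kernel mixture with a few lines of standard-library Python. This is an illustration, not the library's code: the Gaussian kernel, the three-point bivariate sample and the bandwidth are all hypothetical choices. Each sample point keeps its kernel on the remaining component, and its weight is proportional to the kernel evaluated at the conditioning value.

```python
import math


def gaussian_kernel(u):
    """Univariate Gaussian kernel PDF."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


sample = [(0.0, 1.0), (1.0, 2.0), (4.0, -1.0)]  # hypothetical bivariate sample
bandwidth = (0.5, 0.8)                          # hypothetical bandwidth, one value per component


def conditioned_weights(x0):
    """Weights proportional to the normalized kernel on the conditioning component."""
    h0 = bandwidth[0]
    raw = [gaussian_kernel((x0 - s0) / h0) / h0 for s0, _ in sample]
    total = sum(raw)
    return [r / total for r in raw]


def conditional_pdf(x1, x0):
    """Mixture of the kernels on the remaining component, with the conditioned weights."""
    h1 = bandwidth[1]
    alphas = conditioned_weights(x0)
    return sum(
        a * gaussian_kernel((x1 - s1) / h1) / h1
        for a, (_, s1) in zip(alphas, sample)
    )


print(conditioned_weights(0.5))
```

Conditioning at 0.5 places the value midway between the first two sample points, so they share almost all the weight equally, while the distant third point receives a negligible weight.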

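Truncated distributions: Let $\boldsymbol{X}$ be a random vector of dimension $d$ whose PDF is $p_{\boldsymbol{X}}$. Let $\mathcal{D}$ be a domain of $\mathbb{R}^d$ and let $\boldsymbol{X}_T$ be the random vector $\boldsymbol{X}$ truncated to the domain $\mathcal{D}$. It has the following PDF:

$$p_{\boldsymbol{X}_T}(\boldsymbol{x}) = \frac{1}{\alpha} \, p_{\boldsymbol{X}}(\boldsymbol{x}) \, 1_{\mathcal{D}}(\boldsymbol{x})$$

where $\alpha = P(\boldsymbol{X} \in \mathcal{D})$. Let $\boldsymbol{x}_{\mathcal{I}}$ be in the support of the margin $\mathcal{I}$ of $\boldsymbol{X}_T$, denoted by $\boldsymbol{X}_{T, \mathcal{I}}$. We denote by $\boldsymbol{Z}_{TC}$ the conditional random vector:

$$\boldsymbol{Z}_{TC} = \boldsymbol{X}_{T, \overline{\mathcal{I}}} \,|\, \boldsymbol{X}_{T, \mathcal{I}} = \boldsymbol{x}_{\mathcal{I}}$$

The random vector $\boldsymbol{Z}_{TC}$ is defined on the domain:

$$\mathcal{D}_{\overline{\mathcal{I}}} = \{ \boldsymbol{x}_{\overline{\mathcal{I}}} \,:\, (\boldsymbol{x}_{\overline{\mathcal{I}}}, \boldsymbol{x}_{\mathcal{I}}) \in \mathcal{D} \}$$

The domain $\mathcal{D}_{\overline{\mathcal{I}}}$ is not empty, as $\boldsymbol{x}_{\mathcal{I}}$ is in the support of $\boldsymbol{X}_{T, \mathcal{I}}$.

Then, for all $\boldsymbol{x}_{\overline{\mathcal{I}}} \in \mathcal{D}_{\overline{\mathcal{I}}}$, we have:

$$p_{\boldsymbol{Z}_{TC}}(\boldsymbol{x}_{\overline{\mathcal{I}}}) = \frac{p_{\boldsymbol{X}_T}(\boldsymbol{x}_{\overline{\mathcal{I}}}, \boldsymbol{x}_{\mathcal{I}})}{p_{\boldsymbol{X}_{T, \mathcal{I}}}(\boldsymbol{x}_{\mathcal{I}})}$$

which is:

$$p_{\boldsymbol{Z}_{TC}}(\boldsymbol{x}_{\overline{\mathcal{I}}}) = \frac{1}{\alpha \, p_{\boldsymbol{X}_{T, \mathcal{I}}}(\boldsymbol{x}_{\mathcal{I}})} \, p_{\boldsymbol{X}}(\boldsymbol{x}_{\overline{\mathcal{I}}}, \boldsymbol{x}_{\mathcal{I}}) \, 1_{\mathcal{D}_{\overline{\mathcal{I}}}}(\boldsymbol{x}_{\overline{\mathcal{I}}}) \tag{3}$$

Now, we denote by $\boldsymbol{Z}_C$ the conditional random vector:

$$\boldsymbol{Z}_C = \boldsymbol{X}_{\overline{\mathcal{I}}} \,|\, \boldsymbol{X}_{\mathcal{I}} = \boldsymbol{x}_{\mathcal{I}}$$

Then, we have:

$$p_{\boldsymbol{Z}_C}(\boldsymbol{x}_{\overline{\mathcal{I}}}) = \frac{p_{\boldsymbol{X}}(\boldsymbol{x}_{\overline{\mathcal{I}}}, \boldsymbol{x}_{\mathcal{I}})}{p_{\boldsymbol{X}_{\mathcal{I}}}(\boldsymbol{x}_{\mathcal{I}})}$$

Let $\boldsymbol{Z}_{CT}$ be the truncated random vector defined by:

$$\boldsymbol{Z}_{CT} = \boldsymbol{Z}_C \,|\, \boldsymbol{Z}_C \in \mathcal{D}_{\overline{\mathcal{I}}}$$

Then, we have:

$$p_{\boldsymbol{Z}_{CT}}(\boldsymbol{x}_{\overline{\mathcal{I}}}) = \frac{1}{\beta} \, p_{\boldsymbol{Z}_C}(\boldsymbol{x}_{\overline{\mathcal{I}}}) \, 1_{\mathcal{D}_{\overline{\mathcal{I}}}}(\boldsymbol{x}_{\overline{\mathcal{I}}})$$

where $\beta = P(\boldsymbol{Z}_C \in \mathcal{D}_{\overline{\mathcal{I}}})$. Noting that:

$$\beta = \frac{1}{p_{\boldsymbol{X}_{\mathcal{I}}}(\boldsymbol{x}_{\mathcal{I}})} \int_{\mathcal{D}_{\overline{\mathcal{I}}}} p_{\boldsymbol{X}}(\boldsymbol{x}_{\overline{\mathcal{I}}}, \boldsymbol{x}_{\mathcal{I}}) \, d\boldsymbol{x}_{\overline{\mathcal{I}}} = \frac{\alpha \, p_{\boldsymbol{X}_{T, \mathcal{I}}}(\boldsymbol{x}_{\mathcal{I}})}{p_{\boldsymbol{X}_{\mathcal{I}}}(\boldsymbol{x}_{\mathcal{I}})}$$

we get:

$$p_{\boldsymbol{Z}_{CT}}(\boldsymbol{x}_{\overline{\mathcal{I}}}) = \frac{p_{\boldsymbol{X}_{\mathcal{I}}}(\boldsymbol{x}_{\mathcal{I}})}{\alpha \, p_{\boldsymbol{X}_{T, \mathcal{I}}}(\boldsymbol{x}_{\mathcal{I}})} \cdot \frac{p_{\boldsymbol{X}}(\boldsymbol{x}_{\overline{\mathcal{I}}}, \boldsymbol{x}_{\mathcal{I}})}{p_{\boldsymbol{X}_{\mathcal{I}}}(\boldsymbol{x}_{\mathcal{I}})} \, 1_{\mathcal{D}_{\overline{\mathcal{I}}}}(\boldsymbol{x}_{\overline{\mathcal{I}}})$$

which is:

$$p_{\boldsymbol{Z}_{CT}}(\boldsymbol{x}_{\overline{\mathcal{I}}}) = \frac{1}{\alpha \, p_{\boldsymbol{X}_{T, \mathcal{I}}}(\boldsymbol{x}_{\mathcal{I}})} \, p_{\boldsymbol{X}}(\boldsymbol{x}_{\overline{\mathcal{I}}}, \boldsymbol{x}_{\mathcal{I}}) \, 1_{\mathcal{D}_{\overline{\mathcal{I}}}}(\boldsymbol{x}_{\overline{\mathcal{I}}}) \tag{4}$$

The equivalence of the relations (3) and (4) proves the conclusion.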

Conclusion: The conditional distribution of a truncated distribution is the truncated distribution of the conditional distribution. Care: the truncation domains are not exactly the same.
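This commutation can be checked numerically on a small example. The sketch below (standard library only, not the library's classes) assumes a standard bivariate normal vector with a hypothetical correlation `RHO`, a box-shaped truncation domain whose section at the conditioning value `X0` is the interval `[LOWER, UPPER]`, and conditioning on the first component. The first function conditions the truncated vector by numerical integration of the joint PDF; the second truncates the closed-form conditional normal distribution.

```python
import math
from statistics import NormalDist

RHO = 0.5                # assumed correlation
X0 = 0.5                 # conditioning value, assumed to lie inside the box
LOWER, UPPER = 0.0, 1.5  # section of the (hypothetical) box at X0: bounds on x1


def joint_pdf(x0, x1):
    """PDF of a standard bivariate normal vector with correlation RHO."""
    det = 1.0 - RHO * RHO
    quad = (x0 * x0 - 2.0 * RHO * x0 * x1 + x1 * x1) / det
    return math.exp(-0.5 * quad) / (2.0 * math.pi * math.sqrt(det))


def integrate(f, a, b, n=2000):
    """Trapezoidal rule on [a, b]."""
    h = (b - a) / n
    return h * (0.5 * (f(a) + f(b)) + sum(f(a + k * h) for k in range(1, n)))


def cond_of_truncated(x1):
    """Condition the truncated vector; the truncation constant cancels in the ratio."""
    if not LOWER <= x1 <= UPPER:
        return 0.0
    margin = integrate(lambda t: joint_pdf(X0, t), LOWER, UPPER)
    return joint_pdf(X0, x1) / margin


def truncation_of_cond(x1):
    """Truncate X1 | X0 = x0, which is normal with mean RHO * X0 and variance 1 - RHO**2."""
    if not LOWER <= x1 <= UPPER:
        return 0.0
    cond = NormalDist(mu=RHO * X0, sigma=math.sqrt(1.0 - RHO * RHO))
    beta = cond.cdf(UPPER) - cond.cdf(LOWER)
    return cond.pdf(x1) / beta


print(cond_of_truncated(0.7), truncation_of_cond(0.7))
```

The two values agree up to the integration error. Note that the first path truncates to the full box while the second truncates to its one-dimensional section, which is the difference between truncation domains mentioned in the conclusion.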

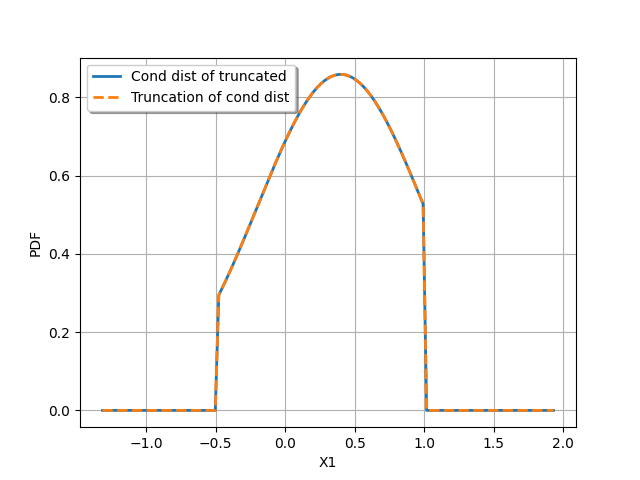

The following figure illustrates the case where with

.

We plot:

the PDF of

conditioned by

(Cond dist of truncated),

the PDF of the truncation to

of

: (Truncation of cond dist).

Note that the numerical range of the conditional distribution might differ from the numerical range of the unconditioned

distribution. For example, consider a bivariate distribution following a normal distribution with zero mean, unit variance and a

correlation

. Then consider

. The numerical range of

is

whereas the

numerical range of

is

. See Create a Point Conditional Distribution to get

some more examples.

Computing the numerical range is important because it makes it possible to integrate the PDF over some domains. The library implements three strategies to compute it, detailed below.

Strategy None: The numerical range of $\boldsymbol{X}_{\overline{\mathcal{I}}} | \boldsymbol{X}_{\mathcal{I}} = \boldsymbol{x}_{\mathcal{I}}$ is the same as the numerical range of $\boldsymbol{X}_{\overline{\mathcal{I}}}$. This range is exact for all distributions with bounded support. For distributions with unbounded support, it is potentially wrong when the conditioning values are very close to the bounds of the initial numerical support.

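Strategy Normal: Let $\boldsymbol{Y}$ be the Gaussian vector of dimension $d$ whose mean vector is defined by $\boldsymbol{\mu} = \mathbb{E}[\boldsymbol{X}]$ and whose covariance matrix is defined by $\boldsymbol{C} = \mathrm{Cov}[\boldsymbol{X}]$.

Then, we build the conditioned Gaussian vector:

$$\boldsymbol{Y}_{\overline{\mathcal{I}}} \,|\, \boldsymbol{Y}_{\mathcal{I}} = \boldsymbol{x}_{\mathcal{I}}$$

The numerical range $\mathcal{D}(\boldsymbol{Y}_{\overline{\mathcal{I}}} | \boldsymbol{Y}_{\mathcal{I}} = \boldsymbol{x}_{\mathcal{I}})$ of $\boldsymbol{Y}_{\overline{\mathcal{I}}} | \boldsymbol{Y}_{\mathcal{I}} = \boldsymbol{x}_{\mathcal{I}}$ is known exactly thanks to the simplification mechanism implemented for Gaussian vectors. We assign to $\boldsymbol{X}_{\overline{\mathcal{I}}} | \boldsymbol{X}_{\mathcal{I}} = \boldsymbol{x}_{\mathcal{I}}$ the range $\mathcal{D}(\boldsymbol{Y}_{\overline{\mathcal{I}}} | \boldsymbol{Y}_{\mathcal{I}} = \boldsymbol{x}_{\mathcal{I}})$:

$$\mathcal{D}(\boldsymbol{X}_{\overline{\mathcal{I}}} | \boldsymbol{X}_{\mathcal{I}} = \boldsymbol{x}_{\mathcal{I}}) = \mathcal{D}(\boldsymbol{Y}_{\overline{\mathcal{I}}} | \boldsymbol{Y}_{\mathcal{I}} = \boldsymbol{x}_{\mathcal{I}})$$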

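Strategy NormalCopula: Let $\boldsymbol{Y}$ be the Gaussian vector of dimension $d$, with zero mean, unit variance and whose correlation matrix $\boldsymbol{R}$ is defined from the Spearman correlation matrix of $\boldsymbol{X}$: $\big( \rho_S(X_i, X_j) \big)_{1 \leq i, j \leq d}$. Thus, $\boldsymbol{Y}$ is the standard representative of the normal copula having the same correlation as $\boldsymbol{X}$.

For each conditioning value $x_i$, we define the quantile $q_i$ of the normal distribution with zero mean and unit variance associated with the same order as $x_i$, for $i \in \mathcal{I}$:

$$q_i = \Phi^{-1} \circ F_i(x_i)$$

where $\Phi$ is the CDF of the normal distribution with zero mean and unit variance and $F_i$ the CDF of $X_i$. Then, we build the conditioned Gaussian vector:

$$\boldsymbol{Y}_{\overline{\mathcal{I}}} \,|\, \boldsymbol{Y}_{\mathcal{I}} = \boldsymbol{q}_{\mathcal{I}}$$

whose numerical range $\mathcal{D}(\boldsymbol{Y}_{\overline{\mathcal{I}}} | \boldsymbol{Y}_{\mathcal{I}} = \boldsymbol{q}_{\mathcal{I}})$ can be exactly computed.

Let it be:

$$\mathcal{D}(\boldsymbol{Y}_{\overline{\mathcal{I}}} | \boldsymbol{Y}_{\mathcal{I}} = \boldsymbol{q}_{\mathcal{I}}) = \prod_{i \in \overline{\mathcal{I}}} \left[ y_i^{min}, y_i^{max} \right]$$

Then, inversely, we compute the quantiles of each $F_i$ for $i \in \overline{\mathcal{I}}$ which have the same order as the bounds $y_i^{min}$ and $y_i^{max}$ with respect to $\Phi$:

$$x_i^{min} = F_i^{-1} \circ \Phi(y_i^{min}), \qquad x_i^{max} = F_i^{-1} \circ \Phi(y_i^{max})$$

We assign to $\boldsymbol{X}_{\overline{\mathcal{I}}} | \boldsymbol{X}_{\mathcal{I}} = \boldsymbol{x}_{\mathcal{I}}$ the numerical range defined by:

$$\mathcal{D}(\boldsymbol{X}_{\overline{\mathcal{I}}} | \boldsymbol{X}_{\mathcal{I}} = \boldsymbol{x}_{\mathcal{I}}) = \prod_{i \in \overline{\mathcal{I}}} \left[ x_i^{min}, x_i^{max} \right]$$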
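The three steps of the NormalCopula strategy (map the conditioning value to a normal quantile, take the range of the conditioned Gaussian vector, map the bounds back through the margins) can be sketched in dimension 2 with the standard library alone. This is only an illustration under explicit assumptions: the correlation `R` is given directly instead of being derived from a Spearman correlation, both margins are exponential, and the Gaussian numerical range is taken as the mean plus or minus `WIDTH` standard deviations, which is a hypothetical rule and not necessarily the one the library uses.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()
R = 0.6      # assumed correlation of the Gaussian vector
RATE = 2.0   # both margins are assumed exponential with this rate
WIDTH = 6.0  # hypothetical half-width of a Gaussian range, in standard deviations


def margin_cdf(x):
    """CDF of the exponential margin."""
    return 1.0 - math.exp(-RATE * x)


def margin_quantile(p):
    """Quantile function of the exponential margin."""
    return -math.log1p(-p) / RATE


def conditional_range(x0):
    """Range assigned to X1 | X0 = x0 by the three-step mapping."""
    # Step 1: normal quantile with the same order as the conditioning value.
    q0 = STD_NORMAL.inv_cdf(margin_cdf(x0))
    # Step 2: Y1 | Y0 = q0 is normal with mean R * q0 and variance 1 - R**2.
    mean = R * q0
    std = math.sqrt(1.0 - R * R)
    y_min, y_max = mean - WIDTH * std, mean + WIDTH * std
    # Step 3: map both bounds back through the margin of X1.
    x_min = margin_quantile(STD_NORMAL.cdf(y_min))
    x_max = margin_quantile(STD_NORMAL.cdf(y_max))
    return x_min, x_max


print(conditional_range(0.5))
```

Because every step is a monotone map, a larger conditioning value shifts both bounds of the resulting range upward when the correlation is positive.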

Case 3: Create a distribution whose parameters are random

The objective is to create the marginal distribution of $\boldsymbol{X}$ in Case 1.

See the class DeconditionedDistribution.

This class requires the following features:

the random vector $\boldsymbol{X}$ may be continuous, discrete or neither: e.g., it can be a Mixture of discrete and continuous distributions. In that case, its parameter set is the union of the parameter sets of its atoms (the weights of the mixture are not considered as parameters),

each component $Y_i$ is continuous or discrete: e.g., it cannot be a Mixture of discrete and continuous distributions (so that the random vector $\boldsymbol{Y}$ may have some discrete components and some continuous components),

the copula of $\boldsymbol{Y}$ is continuous: e.g., it cannot be the MinCopula,

if $\boldsymbol{Y}$ has both discrete components and continuous components, its copula must be the independent copula. The general case has not been implemented yet.

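We define:

$$p_{\boldsymbol{Y}}(\boldsymbol{y}) = \left( \prod_{i=1}^{d} p_i(y_i) \right) c\big(F_1(y_1), \dots, F_d(y_d)\big)$$

where $d$ is the dimension of $\boldsymbol{Y}$, $F_i$ is the CDF of $Y_i$ and:

$c$ is the probability density copula of $\boldsymbol{Y}$,

if $Y_i$ is a continuous component, $p_i$ is its probability density function,

if $Y_i$ is a discrete component, $p_i = \sum_{y^i_j \in S^i} P(Y_i = y^i_j) \, \delta_{y^i_j}$ where $S^i$ is its support and $\delta_{y^i_j}$ the Dirac distribution centered on $y^i_j$.

Then, the PDF of $\boldsymbol{X}$ is defined by:

$$p_{\boldsymbol{X}}(\boldsymbol{x}) = \int p_{\boldsymbol{X}|\boldsymbol{\Theta} = g(\boldsymbol{y})}(\boldsymbol{x}) \, p_{\boldsymbol{Y}}(\boldsymbol{y}) \, d\boldsymbol{y}$$

with the same convention as for $\boldsymbol{Y}$.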
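A deconditioned PDF is obtained by averaging the conditioned PDF over the distribution of the random parameters. The sketch below illustrates this with the standard library only (it does not use the library's classes). It assumes a scalar conditioning variable with a standard normal distribution, a conditioned variable that is normal with mean $\theta$ and unit variance, and an identity link function; these choices and the function names are hypothetical. In that setting the marginal is normal with zero mean and variance 2, which serves as a reference.

```python
import math


def normal_pdf(x, mean=0.0, std=1.0):
    """PDF of the univariate normal distribution."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def link(y):
    """Hypothetical link function g: here theta = y (identity)."""
    return y


def deconditioned_pdf(x, lower=-10.0, upper=10.0, n=4000):
    """Integrate the conditioned PDF against the PDF of Y with the trapezoidal rule."""

    def integrand(y):
        return normal_pdf(x, mean=link(y)) * normal_pdf(y)

    h = (upper - lower) / n
    total = 0.5 * (integrand(lower) + integrand(upper))
    for k in range(1, n):
        total += integrand(lower + k * h)
    return total * h


def reference_pdf(x):
    """Closed form: X is normal with zero mean and variance 2."""
    return normal_pdf(x, mean=0.0, std=math.sqrt(2.0))


print(deconditioned_pdf(0.8), reference_pdf(0.8))
```

For a discrete conditioning variable, the integral would become a sum over its support, following the convention stated above.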

Note that it is always possible to create the random vector $\boldsymbol{X}$ whatever the distribution of its random parameters: see the class DeconditionedRandomVector. But remember that a DeconditionedRandomVector (and more generally a RandomVector) can only be sampled.

Case 4: Create a Bayesian posterior distribution

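Consider the random vector $\boldsymbol{X}$ where $\boldsymbol{X}|\boldsymbol{\Theta}$ follows the distribution $\mathcal{L}_{\boldsymbol{X}|\boldsymbol{\Theta}}$, with $\boldsymbol{\Theta} = g(\boldsymbol{Y})$ and $\boldsymbol{Y}$ following the prior distribution $\mathcal{L}_{\boldsymbol{Y}}$. The function $g$ is a link function whose input dimension is the dimension of $\boldsymbol{Y}$ and whose output dimension is the dimension of $\boldsymbol{\Theta}$.

The objective is to create the posterior distribution of $\boldsymbol{Y}$ given that we have a sample $(\boldsymbol{x}_1, \dots, \boldsymbol{x}_n)$ of $\boldsymbol{X}$.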

See the class PosteriorDistribution.

This class requires the following features:

the random vector $\boldsymbol{X}$ may be continuous, discrete or neither: e.g., it can be a Mixture of discrete and continuous distributions. In that case, its parameter set is the union of the parameter sets of its atoms (the weights of the mixture are not considered as parameters),

each component $Y_i$ is continuous or discrete: e.g., it cannot be a Mixture of discrete and continuous distributions (the random vector $\boldsymbol{Y}$ may have some discrete components and some continuous components),

the copula of $\boldsymbol{Y}$ is continuous: e.g., it cannot be the MinCopula.

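If $\boldsymbol{Y}$ and $\boldsymbol{X}$ are continuous random vectors, then the posterior PDF of $\boldsymbol{Y}$ is defined by:

$$f_{\boldsymbol{Y} | \boldsymbol{X}_1 = \boldsymbol{x}_1, \dots, \boldsymbol{X}_n = \boldsymbol{x}_n}(\boldsymbol{y}) = \frac{f_{\boldsymbol{Y}}(\boldsymbol{y}) \prod_{i=1}^{n} f_{\boldsymbol{X}|\boldsymbol{\theta} = g(\boldsymbol{y})}(\boldsymbol{x}_i)}{\int f_{\boldsymbol{Y}}(\boldsymbol{y}) \prod_{i=1}^{n} f_{\boldsymbol{X}|\boldsymbol{\theta} = g(\boldsymbol{y})}(\boldsymbol{x}_i) \, d\boldsymbol{y}} \tag{5}$$

with $f_{\boldsymbol{X}|\boldsymbol{\theta} = g(\boldsymbol{y})}$ the PDF of the distribution of $\boldsymbol{X}|\boldsymbol{\Theta}$ where $\boldsymbol{\Theta}$ has been replaced by $g(\boldsymbol{y})$ and $f_{\boldsymbol{Y}}$ the PDF of the prior distribution of $\boldsymbol{Y}$.

Note that the denominator of (5) is the PDF of the deconditioned distribution of $(\boldsymbol{X}_1, \dots, \boldsymbol{X}_n) | \boldsymbol{\Theta} = g(\boldsymbol{Y})$ with respect to the prior distribution of $\boldsymbol{Y}$.

In the other cases, the PDF is the probability distribution function for the discrete components and the integrals $\int$ are replaced by sums $\sum$.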
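The posterior formula (prior PDF times the likelihood of the sample, divided by the integral of that product) can be checked on a conjugate example where the result is known in closed form. The sketch below uses only the standard library and is not the library's implementation. It assumes a standard normal prior, observations that are normal with mean $\theta$ and unit variance, an identity link function, and a hypothetical three-point sample. With these assumptions the posterior is normal with mean $\sum_i x_i / (n + 1)$ and variance $1 / (n + 1)$.

```python
import math

sample = [0.9, 1.4, 0.2]  # hypothetical observations of X


def normal_pdf(x, mean=0.0, std=1.0):
    """PDF of the univariate normal distribution."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def unnormalized_posterior(y):
    """Prior PDF of Y times the likelihood of the sample given theta = y."""
    likelihood = 1.0
    for x in sample:
        likelihood *= normal_pdf(x, mean=y)
    return normal_pdf(y) * likelihood


def integrate(f, a, b, n=4000):
    """Trapezoidal rule on [a, b]."""
    h = (b - a) / n
    return h * (0.5 * (f(a) + f(b)) + sum(f(a + k * h) for k in range(1, n)))


# Normalizing constant: integral of the numerator over the prior range.
EVIDENCE = integrate(unnormalized_posterior, -10.0, 10.0)


def posterior_pdf(y):
    """Posterior PDF of Y given the sample."""
    return unnormalized_posterior(y) / EVIDENCE


def reference_pdf(y):
    """Closed form of the conjugate normal posterior."""
    n = len(sample)
    return normal_pdf(y, mean=sum(sample) / (n + 1), std=math.sqrt(1.0 / (n + 1)))


print(posterior_pdf(0.6), reference_pdf(0.6))
```

The normalizing constant computed here plays the role of the deconditioned PDF of the sample with respect to the prior.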

OpenTURNS
