LogNormalMuSigma

- class LogNormalMuSigma(*args)

LogNormal distribution parameters.

- Parameters:

- mu : float

The mean of the LogNormal random variable.

Default value is $e^{0.5}$.

- sigma : float

The standard deviation of the LogNormal random variable, with $\sigma > 0$.

Default value is $\sqrt{e^2 - e}$.

- gamma : float, optional

Location parameter.

Default value is 0.0.

Methods

| Method | Description |
|---|---|
| `evaluate()` | Compute native parameters values. |
| `getClassName()` | Accessor to the object's name. |
| `getDescription()` | Get the description of the parameters. |
| `getDistribution()` | Build a distribution based on a set of native parameters. |
| `getName()` | Accessor to the object's name. |
| `getValues()` | Accessor to the parameters values. |
| `gradient()` | Get the gradient. |
| `hasName()` | Test if the object is named. |
| `inverse(inP)` | Convert to native parameters. |
| `setName(name)` | Accessor to the object's name. |
| `setValues(values)` | Accessor to the parameters values. |

See also

LogNormal

Notes

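Let $X$ be a random variable that follows a LogNormal distribution such that:

$$\mathbb{E}[X] = \mu, \qquad \operatorname{Var}[X] = \sigma^2.$$

The native parameters of $X$ are $\mu_\ell$ and $\sigma_\ell$, which are such that $\log(X - \gamma)$ follows a normal distribution whose mean is $\mu_\ell$ and whose variance is $\sigma_\ell^2$. Then we have:

$$\sigma_\ell = \sqrt{\log\left(1 + \frac{\sigma^2}{(\mu - \gamma)^2}\right)}, \qquad \mu_\ell = \log(\mu - \gamma) - \frac{\sigma_\ell^2}{2}.$$

The default values of $(\mu, \sigma, \gamma)$ are defined so that the associated native parameters have the default values: $(\mu_\ell, \sigma_\ell, \gamma) = (0.0, 1.0, 0.0)$.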
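The conversion described in these notes can be sketched in plain Python, without OpenTURNS. The helper name `native_from_mu_sigma` is hypothetical and not part of the library; the formulas it uses are the standard moment relations of a shifted LogNormal variable, where log(X - gamma) is normal with mean mu_l and variance sigma_l^2. The input values are the ones used in the Examples section.

```python
import math


def native_from_mu_sigma(mu, sigma, gamma=0.0):
    """Hypothetical helper: map (mu, sigma, gamma) to (mu_l, sigma_l, gamma).

    Assumes log(X - gamma) ~ Normal(mu_l, sigma_l^2), E[X] = mu, Var[X] = sigma^2.
    """
    if sigma <= 0.0:
        raise ValueError("sigma must be positive")
    if mu <= gamma:
        raise ValueError("mu must be greater than gamma")
    shifted_mean = mu - gamma
    # sigma_l^2 = log(1 + sigma^2 / (mu - gamma)^2)
    sigma_l = math.sqrt(math.log1p((sigma / shifted_mean) ** 2))
    # mu_l = log(mu - gamma) - sigma_l^2 / 2
    mu_l = math.log(shifted_mean) - 0.5 * sigma_l ** 2
    return mu_l, sigma_l, gamma


# Same inputs as in the Examples section of this page.
mu_l, sigma_l, gamma = native_from_mu_sigma(0.63, 3.3, -0.5)
print(round(mu_l, 5), round(sigma_l, 5), gamma)

# Mean exp(0.5) and standard deviation sqrt(e^2 - e) map to native (0, 1, 0).
default_native = native_from_mu_sigma(math.exp(0.5), math.sqrt(math.e ** 2 - math.e))
print(default_native)
```

The first call should agree, up to rounding, with the output of `parameters.evaluate()` shown in the Examples section.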

Examples

Create the parameters of the LogNormal distribution:

    >>> import openturns as ot
    >>> parameters = ot.LogNormalMuSigma(0.63, 3.3, -0.5)

Convert parameters into the native parameters:

    >>> print(parameters.evaluate())
    [-1.00492,1.50143,-0.5]

The gradient of the transformation of the native parameters into the new parameters:

    >>> print(parameters.gradient())
    [[  1.67704  -0.527552  0        ]
     [ -0.271228  0.180647  0        ]
     [ -1.67704   0.527552  1        ]]

- __init__(*args)

- getClassName()

Accessor to the object’s name.

- Returns:

- class_name : str

The object class name (object.__class__.__name__).

- getDescription()

Get the description of the parameters.

- Returns:

- collection : Description

List of parameters names.

- getDistribution()

Build a distribution based on a set of native parameters.

- Returns:

- distribution : Distribution

Distribution built with the native parameters.
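As a sanity check on what a distribution built from the native parameters should look like, the closed-form moments of a shifted LogNormal variable can be evaluated in plain Python. This is only a sketch: `shifted_lognormal_moments` is a hypothetical helper, not an OpenTURNS function, and the native values are the rounded ones printed in the Examples section.

```python
import math


def shifted_lognormal_moments(mu_l, sigma_l, gamma):
    """Mean and standard deviation of X when log(X - gamma) ~ Normal(mu_l, sigma_l^2)."""
    scale = math.exp(mu_l + 0.5 * sigma_l ** 2)  # E[X - gamma]
    mean = gamma + scale
    std = scale * math.sqrt(math.expm1(sigma_l ** 2))
    return mean, std


# Rounded native parameters taken from the Examples section.
mean, std = shifted_lognormal_moments(-1.00492, 1.50143, -0.5)
print(round(mean, 3), round(std, 3))
```

Up to the rounding of the inputs, the result should recover the mean 0.63 and the standard deviation 3.3 that were passed to the constructor in the Examples section.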

- getName()

Accessor to the object’s name.

- Returns:

- name : str

The name of the object.

- gradient()

Get the gradient.

- Returns:

- gradient : Matrix

The gradient of the transformation of the native parameters into the new parameters.

Notes

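If we note $(p_1, \dots, p_q)$ the native parameters and $(p'_1, \dots, p'_q)$ the new ones, then the gradient matrix is $\left( \dfrac{\partial p_j}{\partial p'_i} \right)_{1 \leq i, j \leq q}$.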
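The matrix printed in the Examples section can be cross-checked with central finite differences in plain Python. The sketch below assumes that row i holds the derivatives of the native parameters with respect to the i-th of (mu, sigma, gamma), which is the layout suggested by the printed example; both function names are hypothetical and not part of OpenTURNS.

```python
import math


def native_from_mu_sigma(mu, sigma, gamma):
    """Hypothetical helper: (mu, sigma, gamma) -> [mu_l, sigma_l, gamma]."""
    shifted_mean = mu - gamma
    sigma_l = math.sqrt(math.log1p((sigma / shifted_mean) ** 2))
    mu_l = math.log(shifted_mean) - 0.5 * sigma_l ** 2
    return [mu_l, sigma_l, gamma]


def finite_difference_gradient(point, step=1e-6):
    """Central differences; row i differentiates with respect to point[i]."""
    rows = []
    for i in range(len(point)):
        upper = list(point)
        lower = list(point)
        upper[i] += step
        lower[i] -= step
        f_upper = native_from_mu_sigma(*upper)
        f_lower = native_from_mu_sigma(*lower)
        rows.append([(a - b) / (2.0 * step) for a, b in zip(f_upper, f_lower)])
    return rows


# Same point as in the Examples section.
approx_gradient = finite_difference_gradient([0.63, 3.3, -0.5])
for row in approx_gradient:
    print([round(value, 5) for value in row])
```

Under that layout assumption, the printed rows should match the output of `parameters.gradient()` in the Examples section to within the finite-difference error.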

- hasName()

Test if the object is named.

- Returns:

- hasName : bool

True if the name is not empty.

- inverse(inP)

Convert to native parameters.

- Parameters:

- inP : sequence of float

The non-native parameters.

- Returns:

- outP : Point

The native parameters.

- setName(name)

Accessor to the object’s name.

- Parameters:

- name : str

The name of the object.

- setValues(values)

Accessor to the parameters values.

- Parameters:

- values : sequence of float

List of parameters values.

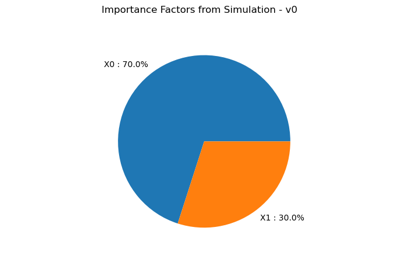

Examples using the class

Apply a transform or inverse transform on your polynomial chaos

OpenTURNS
