Note

Click here to download the full example code

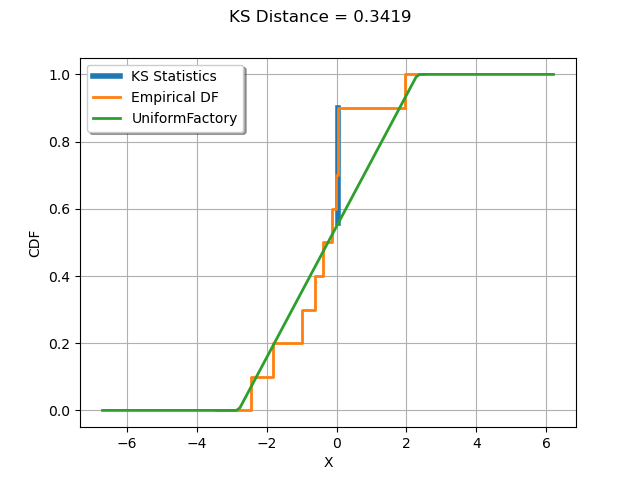

Kolmogorov-Smirnov : understand the statistics¶

In this example, we illustrate how the Kolmogorov-Smirnov statistic is computed.

We generate a sample from a normal distribution.

We create a uniform distribution and estimate its parameters from the sample.

Compute the Kolmogorov-Smirnov statistic and plot it on top of the empirical cumulated distribution function.

import openturns as ot

import openturns.viewer as viewer

from matplotlib import pylab as plt

ot.Log.Show(ot.Log.NONE)

The computeKSStatisticsIndex() function computes the Kolmogorov-Smirnov distance between the sample and the distribution. Furthermore, it returns the index which achieves the maximum distance in the sorted sample. The following function is for teaching purposes only: use FittingTest for real applications.

def computeKSStatisticsIndex(sample, distribution):

sample = ot.Sample(sample.sort())

print("Sorted")

print(sample)

n = sample.getSize()

D = 0.0

index = -1

D_previous = 0.0

for i in range(n):

F = distribution.computeCDF(sample[i])

S1 = abs(F - float(i) / n)

S2 = abs(float(i + 1) / n - F)

print(

"i=%d, x[i]=%.4f, F(x[i])=%.4f, S1=%.4f, S2=%.4f"

% (i, sample[i, 0], F, S1, S2)

)

D = max(S1, S2, D)

if D > D_previous:

print("D max!")

index = i

D_previous = D

observation = sample[index]

return D, index, observation

The drawKSDistance() function plots the empirical distribution function of the sample and the Kolmogorov-Smirnov distance at point x. The empirical CDF is a staircase function and is discontinuous at each observation. Denote by the empirical CDF. For a given observation

which achieves the maximum distance to the candidate distribution CDF, let us denote

and

. The maximum distance can be achieved either by

or

. The computeEmpiricalCDF(x) method computes

. We compute

with the equation

where

is the sample size.

def drawKSDistance(sample, distribution, observation, D, distFactory):

graph = ot.Graph("KS Distance = %.4f" % (D), "X", "CDF", True, "topleft")

# Thick vertical line at point x

ECDF_x_plus = sample.computeEmpiricalCDF(observation)

ECDF_x_minus = ECDF_x_plus - 1.0 / sample.getSize()

CDF_index = distribution.computeCDF(observation)

curve = ot.Curve(

[observation[0], observation[0], observation[0]],

[ECDF_x_plus, ECDF_x_minus, CDF_index],

)

curve.setLegend("KS Statistics")

curve.setLineWidth(4.0 * curve.getLineWidth())

graph.add(curve)

# Empirical CDF

empiricalCDF = ot.UserDefined(sample).drawCDF()

empiricalCDF.setLegends(["Empirical DF"])

graph.add(empiricalCDF)

#

distname = distFactory.getClassName()

distribution = distFactory.build(sample)

cdf = distribution.drawCDF()

cdf.setLegends([distname])

graph.add(cdf)

graph.setColors(ot.Drawable.BuildDefaultPalette(3))

return graph

We generate a sample from a standard normal distribution.

N = ot.Normal()

n = 10

sample = N.getSample(n)

Compute the index which achieves the maximum Kolmogorov-Smirnov distance.

We then create a uniform distribution whose parameters are estimated from the sample. This way, the K.S. distance is large enough to be graphically significant.

distFactory = ot.UniformFactory()

distribution = distFactory.build(sample)

distribution

Compute the index which achieves the maximum Kolmogorov-Smirnov distance.

D, index, observation = computeKSStatisticsIndex(sample, distribution)

print("D=", D, ", Index=", index, ", Obs.=", observation)

Out:

Sorted

0 : [ -2.44405 ]

1 : [ -1.83267 ]

2 : [ -0.984511 ]

3 : [ -0.628132 ]

4 : [ -0.404311 ]

5 : [ -0.1196 ]

6 : [ -0.0273712 ]

7 : [ 0.0259192 ]

8 : [ 0.0503964 ]

9 : [ 1.94903 ]

i=0, x[i]=-2.4441, F(x[i])=0.0714, S1=0.0714, S2=0.0286

D max!

i=1, x[i]=-1.8327, F(x[i])=0.1907, S1=0.0907, S2=0.0093

D max!

i=2, x[i]=-0.9845, F(x[i])=0.3562, S1=0.1562, S2=0.0562

D max!

i=3, x[i]=-0.6281, F(x[i])=0.4257, S1=0.1257, S2=0.0257

i=4, x[i]=-0.4043, F(x[i])=0.4694, S1=0.0694, S2=0.0306

i=5, x[i]=-0.1196, F(x[i])=0.5250, S1=0.0250, S2=0.0750

i=6, x[i]=-0.0274, F(x[i])=0.5430, S1=0.0570, S2=0.1570

D max!

i=7, x[i]=0.0259, F(x[i])=0.5533, S1=0.1467, S2=0.2467

D max!

i=8, x[i]=0.0504, F(x[i])=0.5581, S1=0.2419, S2=0.3419

D max!

i=9, x[i]=1.9490, F(x[i])=0.9286, S1=0.0286, S2=0.0714

D= 0.3418753236663964 , Index= 8 , Obs.= [0.0503964]

graph = drawKSDistance(sample, distribution, observation, D, distFactory)

view = viewer.View(graph)

plt.show()

We see that the K.S. statistics is achieved at the observation where the distance between the empirical distribution function of the sample and the candidate distribution is largest.

Total running time of the script: ( 0 minutes 0.081 seconds)

OpenTURNS

OpenTURNS