NLopt

- class NLopt(*args)

Interface to NLopt.

This class exposes the solvers from the non-linear optimization library [nlopt2009].

More details about the available algorithms can be found in the NLopt documentation.

- Parameters:

- problem : OptimizationProblem

Optimization problem to solve.

- algoName : str

The NLopt identifier of the algorithm. Use GetAlgorithmNames() to list available names.

See also

AbdoRackwitz, Cobyla, SQP, TNC

Notes

Here are some properties of the different algorithms:

| Algorithm | Derivative info | Constraint support |
|---|---|---|
| AUGLAG | no derivative | all |
| AUGLAG_EQ | no derivative | all |
| GD_MLSL | first derivative | bounds required |
| GD_MLSL_LDS | first derivative | bounds required |
| GD_STOGO (optional) | first derivative | bounds required |
| GD_STOGO_RAND (optional) | first derivative | bounds required |
| GN_AGS (optional) | no derivative | bounds required, inequality |
| GN_CRS2_LM | no derivative | bounds required |
| GN_DIRECT | no derivative | bounds required |
| GN_DIRECT_L | no derivative | bounds required |
| GN_DIRECT_L_NOSCAL | no derivative | bounds required |
| GN_DIRECT_L_RAND | no derivative | bounds required |
| GN_DIRECT_L_RAND_NOSCAL | no derivative | bounds required |
| GN_ESCH | no derivative | bounds required |
| GN_ISRES | no derivative | bounds required, all |
| GN_MLSL | no derivative | bounds required |
| GN_MLSL_LDS | no derivative | bounds required |
| GN_ORIG_DIRECT | no derivative | bounds required, inequality |
| GN_ORIG_DIRECT_L | no derivative | bounds required, inequality |
| G_MLSL | no derivative | bounds required |
| G_MLSL_LDS | no derivative | bounds required |
| LD_AUGLAG | first derivative | all |
| LD_AUGLAG_EQ | first derivative | all |
| LD_CCSAQ | first derivative | bounds, inequality |
| LD_LBFGS | first derivative | bounds |
| LD_MMA | first derivative | bounds, inequality |
| LD_SLSQP | first derivative | all |
| LD_TNEWTON | first derivative | bounds |
| LD_TNEWTON_PRECOND | first derivative | bounds |
| LD_TNEWTON_PRECOND_RESTART | first derivative | bounds |
| LD_TNEWTON_RESTART | first derivative | bounds |
| LD_VAR1 | first derivative | bounds |
| LD_VAR2 | first derivative | bounds |
| LN_AUGLAG | no derivative | all |
| LN_AUGLAG_EQ | no derivative | all |
| LN_BOBYQA | no derivative | bounds |
| LN_COBYLA | no derivative | all |
| LN_NELDERMEAD | no derivative | bounds |
| LN_NEWUOA | no derivative | bounds |
| LN_NEWUOA_BOUND | no derivative | bounds |
| LN_PRAXIS | no derivative | bounds |
| LN_SBPLX | no derivative | bounds |

Availability of algorithms marked as optional may vary depending on the NLopt version or compilation options used.
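Most names in the table follow NLopt's naming convention, where a two-letter prefix encodes the search scope (`G` for global, `L` for local) and the derivative requirement (`N` for derivative-free, `D` for gradient-based). The sketch below decodes that prefix in plain Python. The helper `describe_algorithm` is hypothetical and not part of OpenTURNS or NLopt; names without such a prefix (for example `AUGLAG` or `G_MLSL`) are reported as unspecified rather than guessed.

```python
def describe_algorithm(name):
    """Decode the scope/derivative prefix of an NLopt algorithm name.

    Hypothetical helper for illustration only; not part of OpenTURNS.
    Returns (scope, derivative_info), or (None, None) when the name
    does not start with a two-letter prefix such as 'LD' or 'GN'.
    """
    scopes = {"G": "global", "L": "local"}
    derivatives = {"N": "no derivative", "D": "first derivative"}
    prefix = name.split("_", 1)[0]
    if len(prefix) == 2 and prefix[0] in scopes and prefix[1] in derivatives:
        return scopes[prefix[0]], derivatives[prefix[1]]
    return None, None


for algo in ["LD_SLSQP", "GN_DIRECT", "LN_COBYLA", "AUGLAG"]:
    print(algo, describe_algorithm(algo))
```

The constraint support column cannot be derived from the name and still has to be read from the table.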

Examples

    >>> import openturns as ot
    >>> dim = 4
    >>> bounds = ot.Interval([-3.0] * dim, [5.0] * dim)
    >>> linear = ot.SymbolicFunction(['x1', 'x2', 'x3', 'x4'], ['x1+2*x2-3*x3+4*x4'])
    >>> problem = ot.OptimizationProblem(linear, ot.Function(), ot.Function(), bounds)
    >>> print(ot.NLopt.GetAlgorithmNames())
    [AUGLAG,AUGLAG_EQ,GD_MLSL,GD_MLSL_LDS,...
    >>> algo = ot.NLopt(problem, 'LD_MMA')
    >>> algo.setStartingPoint([0.0] * 4)
    >>> algo.run()
    >>> result = algo.getResult()
    >>> x_star = result.getOptimalPoint()
    >>> y_star = result.getOptimalValue()

Methods

| Method | Description |
|---|---|
| GetAlgorithmNames() | Accessor to the list of algorithms provided by NLopt, by names. |
| SetSeed(seed) | Initialize the random generator seed. |
| getAlgorithmName() | Accessor to the algorithm name. |
| getClassName() | Accessor to the object's name. |
| getId() | Accessor to the object's id. |
| getInitialStep() | Initial local derivative-free algorithms step accessor. |
| getLocalSolver() | Local solver accessor. |
| getMaximumAbsoluteError() | Accessor to maximum allowed absolute error. |
| getMaximumConstraintError() | Accessor to maximum allowed constraint error. |
| getMaximumEvaluationNumber() | Accessor to maximum allowed number of evaluations. |
| getMaximumIterationNumber() | Accessor to maximum allowed number of iterations. |
| getMaximumRelativeError() | Accessor to maximum allowed relative error. |
| getMaximumResidualError() | Accessor to maximum allowed residual error. |
| getName() | Accessor to the object's name. |
| getProblem() | Accessor to optimization problem. |
| getResult() | Accessor to optimization result. |
| getShadowedId() | Accessor to the object's shadowed id. |
| getStartingPoint() | Accessor to starting point. |
| getVerbose() | Accessor to the verbosity flag. |
| getVisibility() | Accessor to the object's visibility state. |
| hasName() | Test if the object is named. |
| hasVisibleName() | Test if the object has a distinguishable name. |
| run() | Launch the optimization. |
| setAlgorithmName(algoName) | Accessor to the algorithm name. |
| setInitialStep(initialStep) | Initial local derivative-free algorithms step accessor. |
| setLocalSolver(localSolver) | Local solver accessor. |
| setMaximumAbsoluteError(maximumAbsoluteError) | Accessor to maximum allowed absolute error. |
| setMaximumConstraintError(maximumConstraintError) | Accessor to maximum allowed constraint error. |
| setMaximumEvaluationNumber(maximumEvaluationNumber) | Accessor to maximum allowed number of evaluations. |
| setMaximumIterationNumber(maximumIterationNumber) | Accessor to maximum allowed number of iterations. |
| setMaximumRelativeError(maximumRelativeError) | Accessor to maximum allowed relative error. |
| setMaximumResidualError(maximumResidualError) | Accessor to maximum allowed residual error. |
| setName(name) | Accessor to the object's name. |
| setProblem(problem) | Accessor to optimization problem. |
| setProgressCallback(*args) | Set up a progress callback. |
| setResult(result) | Accessor to optimization result. |
| setShadowedId(id) | Accessor to the object's shadowed id. |
| setStartingPoint(startingPoint) | Accessor to starting point. |
| setStopCallback(*args) | Set up a stop callback. |
| setVerbose(verbose) | Accessor to the verbosity flag. |
| setVisibility(visible) | Accessor to the object's visibility state. |

- __init__(*args)

- static GetAlgorithmNames()

Accessor to the list of algorithms provided by NLopt, by names.

- Returns:

- names : Description

List of algorithm names provided by NLopt, according to its naming convention.

Examples

    >>> import openturns as ot
    >>> print(ot.NLopt.GetAlgorithmNames())
    [AUGLAG,AUGLAG_EQ,GD_MLSL,...

- static SetSeed(seed)

Initialize the random generator seed.

- Parameters:

- seed : int

The RNG seed.

- getAlgorithmName()

Accessor to the algorithm name.

- Returns:

- algoName : str

The NLopt identifier of the algorithm.

- getClassName()

Accessor to the object’s name.

- Returns:

- class_name : str

The object class name (object.__class__.__name__).

- getId()

Accessor to the object’s id.

- Returns:

- id : int

Internal unique identifier.

- getInitialStep()

Initial local derivative-free algorithms step accessor.

- Returns:

- dx : Point

The initial step.

- getMaximumAbsoluteError()

Accessor to maximum allowed absolute error.

- Returns:

- maximumAbsoluteError : float

Maximum allowed absolute error, where the absolute error is defined by $\epsilon^a_n=\|\mathbf{x}_{n+1}-\mathbf{x}_n\|_{\infty}$, where $\mathbf{x}_{n+1}$ and $\mathbf{x}_n$ are two consecutive approximations of the optimum.
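A small sketch of this criterion in plain Python, assuming the distance between the two consecutive iterates is measured with the infinity norm. The function name `absolute_error` is illustrative and not part of OpenTURNS.

```python
def absolute_error(x_next, x_prev):
    """Infinity norm of the difference between two consecutive iterates.

    Illustrative helper; the choice of the infinity norm is an assumption.
    """
    return max(abs(a - b) for a, b in zip(x_next, x_prev))


x_prev = [1.0, 2.0]
x_next = [1.5, 1.75]
eps_a = absolute_error(x_next, x_prev)
print(eps_a)  # 0.5: the largest componentwise change
```

An optimizer would compare this value against the threshold returned by getMaximumAbsoluteError().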

- getMaximumConstraintError()

Accessor to maximum allowed constraint error.

- Returns:

- maximumConstraintError : float

Maximum allowed constraint error, where the constraint error is defined by $\gamma_n=\|g(\mathbf{x}_n)\|_{\infty}$, where $\mathbf{x}_n$ is the current approximation of the optimum and $g$ is the function that gathers all the equality and inequality constraints (violated values only).
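To make "violated values only" concrete, here is a minimal sketch in plain Python. It assumes equality constraints of the form h(x) = 0, inequality constraints of the form g(x) >= 0, and the infinity norm over the violations; these conventions and the name `constraint_error` are assumptions made for illustration.

```python
def constraint_error(equality_values, inequality_values):
    """Largest constraint violation at the current point.

    Assumed conventions (illustration only):
      - an equality value h is violated by |h|
      - an inequality value g is violated only when g < 0, by -g
    """
    violations = [abs(h) for h in equality_values]
    violations += [max(0.0, -g) for g in inequality_values]
    # With no constraints at all, there is nothing to violate.
    return max(violations, default=0.0)


# One slightly violated equality, one satisfied and one violated inequality.
gamma = constraint_error([0.01], [0.3, -0.2])
print(gamma)  # 0.2, from the violated inequality
```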

- getMaximumEvaluationNumber()

Accessor to maximum allowed number of evaluations.

- Returns:

- N : int

Maximum allowed number of evaluations.

- getMaximumIterationNumber()

Accessor to maximum allowed number of iterations.

- Returns:

- N : int

Maximum allowed number of iterations.

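- getMaximumRelativeError()

Accessor to maximum allowed relative error.

- Returns:

- maximumRelativeError : float

Maximum allowed relative error, where the relative error is defined by $\epsilon^r_n=\|\mathbf{x}_{n+1}-\mathbf{x}_n\|_{\infty}/\|\mathbf{x}_{n+1}\|_{\infty}$ if $\|\mathbf{x}_{n+1}\|_{\infty}\neq 0$, else $\epsilon^r_n=-1$.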
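The "if ..., else ..." structure of this definition can be sketched as follows. The sketch assumes the relative error is the infinity-norm step length divided by the infinity norm of the newest iterate, with -1 as the sentinel when that norm is zero; `relative_error` is an illustrative name, not an OpenTURNS function.

```python
def relative_error(x_next, x_prev):
    """Step length relative to the size of the newest iterate.

    Assumptions (illustration only): infinity norms are used, and -1.0
    is returned when the newest iterate has zero norm.
    """
    norm_next = max(abs(a) for a in x_next)
    if norm_next == 0.0:
        return -1.0  # undefined ratio, flagged with a sentinel
    step = max(abs(a - b) for a, b in zip(x_next, x_prev))
    return step / norm_next


print(relative_error([1.5, 2.0], [1.0, 2.0]))  # 0.25
print(relative_error([0.0, 0.0], [1.0, 2.0]))  # -1.0
```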

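- getMaximumResidualError()

Accessor to maximum allowed residual error.

- Returns:

- maximumResidualError : float

Maximum allowed residual error, where the residual error is defined by $\epsilon^r_n=\frac{|f(\mathbf{x}_{n+1})-f(\mathbf{x}_{n})|}{|f(\mathbf{x}_{n+1})|}$ if $|f(\mathbf{x}_{n+1})|\neq 0$, else $\epsilon^r_n=-1$.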
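The residual error works on objective values rather than on iterates. A minimal plain-Python sketch of the definition given for this criterion (relative change of the objective, with -1 when the newest objective value is zero); the name `residual_error` is illustrative.

```python
def residual_error(f_next, f_prev):
    """Relative change of the objective between two consecutive iterates.

    Returns -1.0 when the newest objective value is zero, since the
    ratio is undefined in that case.
    """
    if f_next == 0.0:
        return -1.0
    return abs(f_next - f_prev) / abs(f_next)


print(residual_error(2.0, 2.5))  # 0.25
print(residual_error(0.0, 2.5))  # -1.0
```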

- getName()

Accessor to the object’s name.

- Returns:

- name : str

The name of the object.

- getProblem()

Accessor to optimization problem.

- Returns:

- problem : OptimizationProblem

Optimization problem.

- getResult()

Accessor to optimization result.

- Returns:

- result : OptimizationResult

Result class.

- getShadowedId()

Accessor to the object’s shadowed id.

- Returns:

- id : int

Internal unique identifier.

- getVerbose()

Accessor to the verbosity flag.

- Returns:

- verbose : bool

Verbosity flag state.

- getVisibility()

Accessor to the object’s visibility state.

- Returns:

- visible : bool

Visibility flag.

- hasName()

Test if the object is named.

- Returns:

- hasName : bool

True if the name is not empty.

- hasVisibleName()

Test if the object has a distinguishable name.

- Returns:

- hasVisibleName : bool

True if the name is not empty and not the default one.

- run()

Launch the optimization.

- setAlgorithmName(algoName)

Accessor to the algorithm name.

- Parameters:

- algoName : str

The NLopt identifier of the algorithm.

- setInitialStep(initialStep)

Initial local derivative-free algorithms step accessor.

- Parameters:

- dx : sequence of float

The initial step.

- setMaximumAbsoluteError(maximumAbsoluteError)

Accessor to maximum allowed absolute error.

- Parameters:

- maximumAbsoluteError : float

Maximum allowed absolute error, where the absolute error is defined by $\epsilon^a_n=\|\mathbf{x}_{n+1}-\mathbf{x}_n\|_{\infty}$, where $\mathbf{x}_{n+1}$ and $\mathbf{x}_n$ are two consecutive approximations of the optimum.

- setMaximumConstraintError(maximumConstraintError)

Accessor to maximum allowed constraint error.

- Parameters:

- maximumConstraintError : float

Maximum allowed constraint error, where the constraint error is defined by $\gamma_n=\|g(\mathbf{x}_n)\|_{\infty}$, where $\mathbf{x}_n$ is the current approximation of the optimum and $g$ is the function that gathers all the equality and inequality constraints (violated values only).

- setMaximumEvaluationNumber(maximumEvaluationNumber)

Accessor to maximum allowed number of evaluations.

- Parameters:

- N : int

Maximum allowed number of evaluations.

- setMaximumIterationNumber(maximumIterationNumber)

Accessor to maximum allowed number of iterations.

- Parameters:

- N : int

Maximum allowed number of iterations.

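- setMaximumRelativeError(maximumRelativeError)

Accessor to maximum allowed relative error.

- Parameters:

- maximumRelativeError : float

Maximum allowed relative error, where the relative error is defined by $\epsilon^r_n=\|\mathbf{x}_{n+1}-\mathbf{x}_n\|_{\infty}/\|\mathbf{x}_{n+1}\|_{\infty}$ if $\|\mathbf{x}_{n+1}\|_{\infty}\neq 0$, else $\epsilon^r_n=-1$.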

- setMaximumResidualError(maximumResidualError)

Accessor to maximum allowed residual error.

- Parameters:

- maximumResidualError : float

Maximum allowed residual error, where the residual error is defined by $\epsilon^r_n=\frac{|f(\mathbf{x}_{n+1})-f(\mathbf{x}_{n})|}{|f(\mathbf{x}_{n+1})|}$ if $|f(\mathbf{x}_{n+1})|\neq 0$, else $\epsilon^r_n=-1$.

- setName(name)

Accessor to the object’s name.

- Parameters:

- name : str

The name of the object.

- setProblem(problem)

Accessor to optimization problem.

- Parameters:

- problem : OptimizationProblem

Optimization problem.

- setProgressCallback(*args)

Set up a progress callback.

Can be used to programmatically report the progress of an optimization.

- Parameters:

- callback : callable

Takes a single float argument: the progress, expressed as a percentage.

Examples

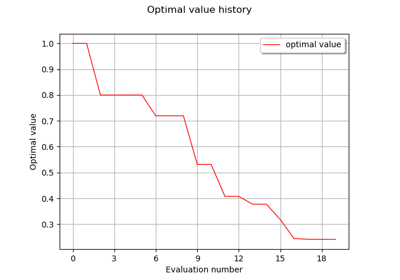

    >>> import sys
    >>> import openturns as ot
    >>> rosenbrock = ot.SymbolicFunction(['x1', 'x2'], ['(1-x1)^2+100*(x2-x1^2)^2'])
    >>> problem = ot.OptimizationProblem(rosenbrock)
    >>> solver = ot.OptimizationAlgorithm(problem)
    >>> solver.setStartingPoint([0, 0])
    >>> solver.setMaximumResidualError(1.e-3)
    >>> solver.setMaximumEvaluationNumber(10000)
    >>> def report_progress(progress):
    ...     sys.stderr.write('-- progress=' + str(progress) + '%\n')
    >>> solver.setProgressCallback(report_progress)
    >>> solver.run()
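A callback that writes on every call can flood the log. One possible refinement, sketched below in plain Python, is a callable that only reports when progress has advanced by a minimum amount. The factory `make_progress_reporter` and its `step` and `stream` parameters are hypothetical names introduced for this illustration; the only assumption about the API is the one stated above, that the callback receives the progress as a float percentage.

```python
import io
import sys


def make_progress_reporter(step=10.0, stream=sys.stderr):
    """Build a progress callback that reports at most every `step` percent.

    Hypothetical helper for illustration; not part of OpenTURNS.
    """
    last_reported = -step  # ensures the first call (progress >= 0) is reported

    def report(progress):
        nonlocal last_reported
        if progress - last_reported >= step:
            last_reported = progress
            stream.write("-- progress=%.0f%%\n" % progress)

    return report


# Demonstration with an in-memory stream and hand-made progress values.
demo_stream = io.StringIO()
report_progress = make_progress_reporter(step=25.0, stream=demo_stream)
for p in (0.0, 10.0, 30.0, 40.0, 100.0):
    report_progress(p)
print(demo_stream.getvalue(), end="")
```

In practice the returned callable would be passed to setProgressCallback in place of the simpler function shown in the example.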

- setResult(result)

Accessor to optimization result.

- Parameters:

- result : OptimizationResult

Result class.

- setShadowedId(id)

Accessor to the object’s shadowed id.

- Parameters:

- id : int

Internal unique identifier.

- setStartingPoint(startingPoint)

Accessor to starting point.

- Parameters:

- startingPoint : Point

Starting point.

- setStopCallback(*args)

Set up a stop callback.

Can be used to programmatically stop an optimization.

- Parameters:

- callback : callable

Returns an int deciding whether to stop or continue.

Examples

    >>> import openturns as ot
    >>> rosenbrock = ot.SymbolicFunction(['x1', 'x2'], ['(1-x1)^2+100*(x2-x1^2)^2'])
    >>> problem = ot.OptimizationProblem(rosenbrock)
    >>> solver = ot.OptimizationAlgorithm(problem)
    >>> solver.setStartingPoint([0, 0])
    >>> solver.setMaximumResidualError(1.e-3)
    >>> solver.setMaximumEvaluationNumber(10000)
    >>> def ask_stop():
    ...     return True
    >>> solver.setStopCallback(ask_stop)
    >>> solver.run()
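The callback in the example always asks to stop. A more typical use might be a wall-clock budget, sketched below in plain Python. The factory `make_time_budget_stop` and its `clock` parameter are hypothetical names introduced here; the sketch only relies on the callback taking no argument and returning a truthy value to request a stop, as in the example.

```python
import time


def make_time_budget_stop(budget_seconds, clock=time.monotonic):
    """Build a stop callback that requests a stop once a time budget is spent.

    Hypothetical helper for illustration; not part of OpenTURNS.
    `clock` is injectable so the behaviour can be checked without waiting.
    """
    start = clock()

    def ask_stop():
        # True means "stop now", False means "keep going".
        return clock() - start > budget_seconds

    return ask_stop


ask_stop = make_time_budget_stop(60.0)
# solver.setStopCallback(ask_stop)  # `solver` as in the example above
print(ask_stop())  # False immediately after creation
```

The budget starts when the callback is created, so it is best built just before calling run().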

- setVerbose(verbose)

Accessor to the verbosity flag.

- Parameters:

- verbose : bool

Verbosity flag state.

- setVisibility(visible)

Accessor to the object’s visibility state.

- Parameters:

- visible : bool

Visibility flag.

OpenTURNS
