GeneralizedExtremeValueFactory

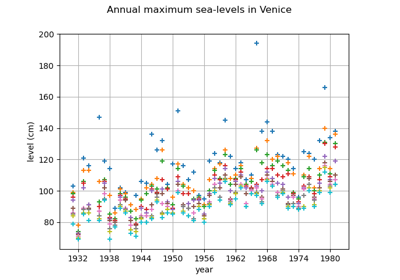

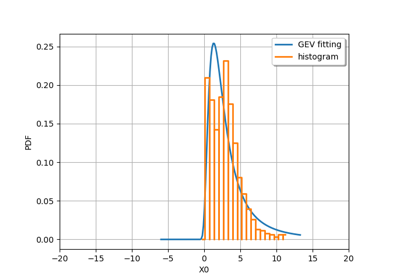

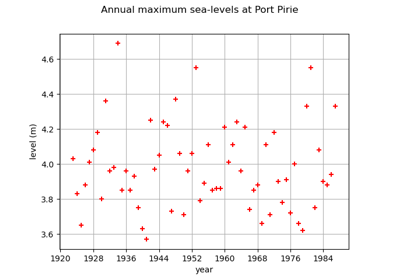

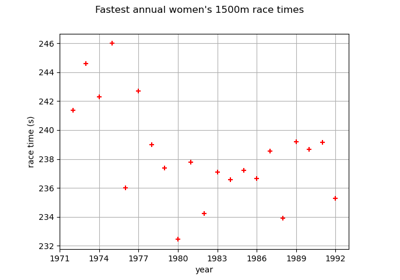

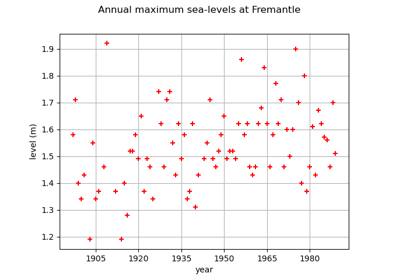

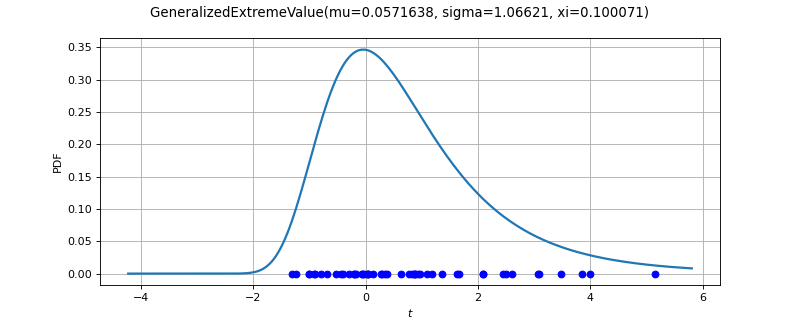


- class GeneralizedExtremeValueFactory(*args)

GeneralizedExtremeValue factory.

Methods

| Method | Description |
|---|---|
| build(*args) | Estimate the distribution via maximum likelihood. |
| buildAsGeneralizedExtremeValue(*args) | Estimate the distribution as native distribution. |
| buildCovariates(*args) | Estimate a GEV from covariates. |
| buildEstimator(*args) | Build the distribution and the parameter distribution. |
| buildMethodOfLikelihoodMaximization(sample[, r]) | Estimate the distribution from the $r$ largest order statistics. |
| buildMethodOfLikelihoodMaximizationEstimator(sample[, r]) | Estimate the distribution and the parameter distribution with the R-maxima method. |
| buildMethodOfXiProfileLikelihood(sample[, r]) | Estimate the distribution with the profile likelihood. |
| buildMethodOfXiProfileLikelihoodEstimator(sample[, r]) | Estimate the distribution and the parameter distribution with the profile likelihood. |
| buildReturnLevelEstimator(result, m) | Estimate a return level and its distribution from the GEV parameters. |
| buildReturnLevelProfileLikelihood(sample, m) | Estimate a return level and its distribution with the profile likelihood. |
| buildReturnLevelProfileLikelihoodEstimator(sample, m) | Estimate $z_m$ and its distribution with the profile likelihood. |
| buildTimeVarying(*args) | Estimate a non stationary GEV from a time-dependent parametric model. |
| getBootstrapSize() | Accessor to the bootstrap size. |
| getClassName() | Accessor to the object's name. |
| getKnownParameterIndices() | Accessor to the known parameters indices. |
| getKnownParameterValues() | Accessor to the known parameters values. |
| getName() | Accessor to the object's name. |
| getOptimizationAlgorithm() | Accessor to the solver. |
| hasName() | Test if the object is named. |
| setBootstrapSize(bootstrapSize) | Accessor to the bootstrap size. |
| setKnownParameter(values, positions) | Accessor to the known parameters. |
| setName(name) | Accessor to the object's name. |
| setOptimizationAlgorithm(solver) | Accessor to the solver. |

See also

Notes

Several estimators to build a GeneralizedExtremeValue distribution from a scalar sample are proposed. The details are given in the methods documentation.

The following ResourceMap entries can be used to tweak the parameters of the optimization solver involved in the different estimators:

- GeneralizedExtremeValueFactory-DefaultOptimizationAlgorithm
- GeneralizedExtremeValueFactory-MaximumCallsNumber
- GeneralizedExtremeValueFactory-MaximumAbsoluteError
- GeneralizedExtremeValueFactory-MaximumRelativeError
- GeneralizedExtremeValueFactory-MaximumObjectiveError
- GeneralizedExtremeValueFactory-MaximumConstraintError
- GeneralizedExtremeValueFactory-InitializationMethod
- GeneralizedExtremeValueFactory-NormalizationMethod

- __init__(*args)

- build(*args)

Estimate the distribution via maximum likelihood.

Available usages:

build(sample)

build(param)

- Parameters:

- sample : 2-d sequence of float

The block maxima sample of dimension 1 from which $(\mu, \sigma, \xi)$ are estimated.

- param : sequence of float

The parameters of the GeneralizedExtremeValue.

- Returns:

- distribution : GeneralizedExtremeValue

The estimated distribution.

Notes

The estimation strategy described in buildAsGeneralizedExtremeValue() is followed.

- buildAsGeneralizedExtremeValue(*args)

Estimate the distribution as native distribution.

Available usages:

buildAsGeneralizedExtremeValue()

buildAsGeneralizedExtremeValue(sample)

buildAsGeneralizedExtremeValue(param)

- Parameters:

- sample : 2-d sequence of float

The block maxima sample of dimension 1 from which $(\mu, \sigma, \xi)$ are estimated.

- param : sequence of float

The parameters of the GeneralizedExtremeValue.

- Returns:

- distribution : GeneralizedExtremeValue

The estimated distribution as a GeneralizedExtremeValue.

In the first usage, the default GeneralizedExtremeValue distribution is built.

Notes

The estimate maximizes the log-likelihood of the model.

- buildCovariates(*args)

Estimate a GEV from covariates.

- Parameters:

- sample : 2-d sequence of float

The block maxima grouped in a sample of size $n$ and one dimension.

- covariates : 2-d sequence of float

Covariates sample. A constant column is automatically added if it is not provided.

- muIndices : sequence of int, optional

Indices of covariates considered for parameter $\mu$.

By default, an empty sequence.

The index of the constant covariate is added if empty or if the covariates do not initially contain a constant column.

- sigmaIndices : sequence of int, optional

Indices of covariates considered for parameter $\sigma$.

By default, an empty sequence.

The index of the constant covariate is added if empty or if the covariates do not initially contain a constant column.

- xiIndices : sequence of int, optional

Indices of covariates considered for parameter $\xi$.

By default, an empty sequence.

The index of the constant covariate is added if empty or if the covariates do not initially contain a constant column.

- muLink : Function, optional

The $h_{\mu}$ function.

By default, the identity function.

- sigmaLink : Function, optional

The $h_{\sigma}$ function.

By default, the identity function.

- xiLink : Function, optional

The $h_{\xi}$ function.

By default, the identity function.

- initializationMethod : str, optional

The initialization method for the optimization problem: Gumbel or Static.

By default, the method Gumbel (see ResourceMap, key GeneralizedExtremeValueFactory-InitializationMethod).

- normalizationMethod : str, optional

The data normalization method: CenterReduce, MinMax or None.

By default, the method MinMax (see ResourceMap, key GeneralizedExtremeValueFactory-NormalizationMethod).

- Returns:

- result : CovariatesResult

The result class.

Notes

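Let $Z_{\mathbf{y}}$ be a GEV model whose parameters depend on $d$ covariates denoted by $\mathbf{y} = (y_1, \dots, y_d)^T$:

$$Z_{\mathbf{y}} \sim \mathrm{GEV}(\mu(\mathbf{y}), \sigma(\mathbf{y}), \xi(\mathbf{y}))$$

We denote by $(z_{\mathbf{y}_1}, \dots, z_{\mathbf{y}_n})$ the values of $Z_{\mathbf{y}}$ associated to the values of the covariates $(\mathbf{y}_1, \dots, \mathbf{y}_n)$.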

For numerical reasons, it is recommended to normalize the covariates. Each covariate $y_k$ has its own normalization:

$$\tilde{y}_k = \tau_k(y_k) = \frac{y_k - c_k}{d_k}$$

with three ways of defining $(c_k, d_k)$ for the covariate $y_k$:

- the CenterReduce method where $c_k = \frac{1}{n} \sum_{i=1}^n y_{k,i}$ is the covariate mean and $d_k$ is the standard deviation of the covariate;
- the MinMax method where $c_k = \min_i y_{k,i}$ is the min value of the covariate $y_k$ and $d_k = \max_i y_{k,i} - \min_i y_{k,i}$ its range. This is the default method;
- the None method where $c_k = 0$ and $d_k = 1$: in that case, data are not normalized.
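The three normalization rules can be sketched in plain Python, independently of the library. The helper names `normalization_constants` and `normalize` are hypothetical, and the use of the population standard deviation (divisor $n$) for CenterReduce is an assumption, since the text above does not state which divisor is used.

```python
import math


def normalization_constants(values, method="MinMax"):
    """Return (c, d) such that the normalized covariate is (y - c) / d."""
    n = len(values)
    if method == "CenterReduce":
        c = sum(values) / n
        # Assumption: population standard deviation (divisor n).
        d = math.sqrt(sum((y - c) ** 2 for y in values) / n)
    elif method == "MinMax":
        c = min(values)
        d = max(values) - min(values)
    elif method == "None":
        c, d = 0.0, 1.0
    else:
        raise ValueError(f"unknown normalization method: {method}")
    return c, d


def normalize(values, method="MinMax"):
    """Apply y -> (y - c) / d to every value of one covariate."""
    c, d = normalization_constants(values, method)
    return [(y - c) / d for y in values]


covariate = [2.0, 4.0, 6.0, 10.0]
for method in ("CenterReduce", "MinMax", "None"):
    print(method, normalize(covariate, method))
```

With MinMax the normalized covariate spans $[0, 1]$; with CenterReduce it has zero mean and unit standard deviation.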

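Let $\boldsymbol{\theta} = (\mu, \sigma, \xi)$ be the vector of parameters. Then, $\boldsymbol{\theta}$ depends on all the $d$ covariates even if each component of $\boldsymbol{\theta}$ only depends on a subset of the covariates. We denote by $(y_1^q, \dots, y_{d_q}^q)$ the $d_q$ covariates involved in the modelling of the component $\theta_q$.

Each component $\theta_q$ can be written as a function of the normalized covariates:

$$\theta_q(y_1^q, \dots, y_{d_q}^q) = h_q\left(\sum_{i=1}^{d_q} \tilde{\beta}_i^q \tilde{y}_i^q\right)$$

This relation can be written as a function of the real covariates:

$$\theta_q(y_1^q, \dots, y_{d_q}^q) = h_q\left(\sum_{i=1}^{d_q} \beta_i^q y_i^q\right)$$

where:

- $h_q: \mathbb{R} \mapsto \mathbb{R}$ is usually referred to as the inverse-link function of the component $\theta_q$,
- each $\beta_i^q \in \mathbb{R}$.

To allow some parameters to remain constant, i.e. independent of the covariates (this will generally be the case for the parameter $\xi$), the library systematically adds the constant covariate to the specified covariates.

The complete vector of parameters is defined by:

$$\boldsymbol{\beta} = (\beta_1^1, \dots, \beta_{d_1}^1, \beta_1^2, \dots, \beta_{d_2}^2, \beta_1^3, \dots, \beta_{d_3}^3) \in \mathbb{R}^{d_t}$$

where $d_t = \sum_{q=1}^3 d_q$.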
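As an illustration of the relation between a parameter, its coefficients and its inverse-link function, here is a minimal plain-Python sketch. The function `parameter_value` and all coefficient values are hypothetical; the constant covariate is represented by a covariate equal to 1.

```python
import math


def parameter_value(betas, covariates, inverse_link=lambda x: x):
    """Evaluate theta_q = h_q(sum_i beta_i * y_i) for one GEV parameter."""
    if len(betas) != len(covariates):
        raise ValueError("one coefficient per covariate is expected")
    linear_predictor = sum(b * y for b, y in zip(betas, covariates))
    return inverse_link(linear_predictor)


# One real covariate (0.5) followed by the constant covariate (1.0).
y = [0.5, 1.0]

mu = parameter_value([2.0, 10.0], y)              # identity inverse link
sigma = parameter_value([0.2, 0.3], y, math.exp)  # exponential inverse link keeps sigma > 0
xi = parameter_value([0.1], [1.0])                # constant parameter: constant covariate only

print(mu, sigma, xi)
```

Choosing an exponential inverse link for $\sigma$ is a common way to enforce positivity; the library default documented above is the identity function.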

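The estimator of $\boldsymbol{\beta}$ maximizes the likelihood of the model which is defined by:

$$L(\boldsymbol{\beta}) = \prod_{i=1}^{n} g(z_{\mathbf{y}_i}; \boldsymbol{\theta}(\mathbf{y}_i))$$

where $g(\cdot\,; \boldsymbol{\theta}(\mathbf{y}_i))$ denotes the GEV density function with parameters $\boldsymbol{\theta}(\mathbf{y}_i)$ and evaluated at $z_{\mathbf{y}_i}$.

Then, if none of the $\xi(\mathbf{y}_i)$ is zero, the log-likelihood is defined by:

$$\ell(\boldsymbol{\beta}) = -\sum_{i=1}^{n} \left\{ \log \sigma(\mathbf{y}_i) + \left(1 + \frac{1}{\xi(\mathbf{y}_i)}\right) \log\left[1 + \xi(\mathbf{y}_i) \frac{z_{\mathbf{y}_i} - \mu(\mathbf{y}_i)}{\sigma(\mathbf{y}_i)}\right] + \left[1 + \xi(\mathbf{y}_i) \frac{z_{\mathbf{y}_i} - \mu(\mathbf{y}_i)}{\sigma(\mathbf{y}_i)}\right]^{-1/\xi(\mathbf{y}_i)} \right\}$$

defined on $(\mu, \sigma, \xi)$ such that $1 + \xi(\mathbf{y}_i) \dfrac{z_{\mathbf{y}_i} - \mu(\mathbf{y}_i)}{\sigma(\mathbf{y}_i)} > 0$ for all $\mathbf{y}_i$.

And if any of the $\xi(\mathbf{y}_i)$ is equal to 0, the log-likelihood is defined as:

$$\ell(\boldsymbol{\beta}) = -\sum_{i=1}^{n} \left\{ \log \sigma(\mathbf{y}_i) + \frac{z_{\mathbf{y}_i} - \mu(\mathbf{y}_i)}{\sigma(\mathbf{y}_i)} + \exp\left[-\frac{z_{\mathbf{y}_i} - \mu(\mathbf{y}_i)}{\sigma(\mathbf{y}_i)}\right] \right\}$$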

The initialization of the optimization problem is crucial. Two initial points $(\mu_0, \sigma_0, \xi_0)$ are proposed:

- the Gumbel initial point: in that case, we assume that the GEV is a stationary Gumbel distribution and we deduce $(\mu_0, \sigma_0)$ from the mean $\bar{z}$ and standard deviation $s$ of the data: $\sigma_0 = \dfrac{\sqrt{6}}{\pi} s$ and $\mu_0 = \bar{z} - \gamma \sigma_0$ where $\gamma \approx 0.57722$ is Euler’s constant; then we take the initial point $(\mu_0, \sigma_0, \xi_0)$. This is the default initial point;
- the Static initial point: in that case, we assume that the GEV is stationary and $(\mu_0, \sigma_0, \xi_0)$ is the maximum likelihood estimate resulting from that assumption.
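The Gumbel initial point is a method-of-moments computation, which can be sketched in plain Python as follows. The function name `gumbel_initial_point` is hypothetical, and the use of the unbiased ($n - 1$) standard deviation is an assumption; the value of $\xi_0$ is not reproduced here.

```python
import math

EULER_GAMMA = 0.5772156649015329  # Euler's constant


def gumbel_initial_point(sample):
    """Return (mu0, sigma0) matching the mean and standard deviation of a Gumbel law."""
    n = len(sample)
    mean = sum(sample) / n
    # Assumption: unbiased sample standard deviation (divisor n - 1).
    std = math.sqrt(sum((z - mean) ** 2 for z in sample) / (n - 1))
    sigma0 = math.sqrt(6.0) * std / math.pi
    mu0 = mean - EULER_GAMMA * sigma0
    return mu0, sigma0


block_maxima = [1.0, 2.0, 3.0, 4.0, 5.0]
print(gumbel_initial_point(block_maxima))
```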

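The result class provides:

- the estimator $\hat{\boldsymbol{\beta}}$,
- the asymptotic distribution of $\hat{\boldsymbol{\beta}}$,
- the parameter function $\mathbf{y} \mapsto \boldsymbol{\theta}(\mathbf{y})$,
- the graphs of the parameter functions $y_k \mapsto \theta_q(\mathbf{y})$, where all the components of $\mathbf{y}$ are fixed to a reference value except for $y_k$, for each $k$,
- the graphs of the parameter functions $(y_k, y_\ell) \mapsto \theta_q(\mathbf{y})$, where all the components of $\mathbf{y}$ are fixed to a reference value except for $(y_k, y_\ell)$, for each $(k, \ell)$,
- the normalizing function $\mathbf{y} \mapsto (\tau_1(y_1), \dots, \tau_d(y_d))$,
- the optimal log-likelihood value $\ell(\hat{\boldsymbol{\beta}})$,
- the GEV distribution at covariate $\mathbf{y}$,
- the graphs of the quantile functions of order $p$: $y_k \mapsto q_p(Z_{\mathbf{y}})$ where all the components of $\mathbf{y}$ are fixed to a reference value except for $y_k$, for each $k$,
- the graphs of the quantile functions of order $p$: $(y_k, y_\ell) \mapsto q_p(Z_{\mathbf{y}})$ where all the components of $\mathbf{y}$ are fixed to a reference value except for $(y_k, y_\ell)$, for each $(k, \ell)$.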

- buildEstimator(*args)

Build the distribution and the parameter distribution.

- Parameters:

- sample : 2-d sequence of float

Data.

- parameters : DistributionParameters

Optional, the parametrization.

- Returns:

- resDist : DistributionFactoryResult

The results.

Notes

According to the way the native parameters of the distribution are estimated, the parameters distribution differs:

- Moments method: the asymptotic parameters distribution is normal and estimated by Bootstrap on the initial data;
- Maximum likelihood method with a regular model: the asymptotic parameters distribution is normal and its covariance matrix is the inverse Fisher information matrix;
- Other methods: the asymptotic parameters distribution is estimated by Bootstrap on the initial data and kernel fitting (see KernelSmoothing).

If another set of parameters is specified, the native parameters distribution is first estimated and the new distribution is determined from it:

- if the native parameters distribution is normal and the transformation regular at the estimated parameters values: the asymptotic parameters distribution is normal and its covariance matrix determined from the inverse Fisher information matrix of the native parameters and the transformation;
- in the other cases, the asymptotic parameters distribution is estimated by Bootstrap on the initial data and kernel fitting.
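The bootstrap step mentioned in these notes can be sketched generically in plain Python: the data are resampled with replacement and the estimator is re-evaluated on each resample. This is only an illustration of the resampling idea; `bootstrap_estimates` is a hypothetical helper, the sample mean stands in for a real parameter estimator, and the kernel fitting of the resulting estimates is not shown.

```python
import random


def bootstrap_estimates(sample, estimator, bootstrap_size, seed=0):
    """Re-evaluate `estimator` on `bootstrap_size` resamples drawn with replacement."""
    rng = random.Random(seed)
    n = len(sample)
    estimates = []
    for _ in range(bootstrap_size):
        resample = [sample[rng.randrange(n)] for _ in range(n)]
        estimates.append(estimator(resample))
    return estimates


def sample_mean(values):
    return sum(values) / len(values)


data = [2.1, 3.4, 2.8, 4.9, 3.0, 2.5, 3.7]
estimates = bootstrap_estimates(data, sample_mean, bootstrap_size=100)
print(min(estimates), max(estimates))
```

The spread of `estimates` approximates the sampling variability of the estimator; the number of resamples plays the role of the bootstrap size set with setBootstrapSize().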

- buildMethodOfLikelihoodMaximization(sample, r=0)

Estimate the distribution from the $r$ largest order statistics.

- Parameters:

- sample : 2-d sequence of float

Block maxima grouped in a sample of size $m$ and dimension $R$.

- r : int, $0 \leq r \leq R$, optional

Number of largest order statistics taken into account among the $R$ stored ones.

By default, $r=0$ which means that all the maxima are used.

- Returns:

- distribution : GeneralizedExtremeValue

The estimated distribution.

Notes

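The method estimates a GEV distribution parameterized by $\boldsymbol{\theta} = (\mu, \sigma, \xi)$ from a given sample.

Let us suppose we have a series of independent and identically distributed variables and that data are grouped into $m$ blocks. In each block, the largest $R$ observations are recorded.

We define the series $M_i^{(r)} = (z_i^{(1)}, \dots, z_i^{(r)})$ for $1 \leq i \leq m$ where the values are sorted in decreasing order.

The estimator of $(\mu, \sigma, \xi)$ maximizes the log-likelihood built from the $r$ largest order statistics, with $1 \leq r \leq R$, defined as:

If $\xi \neq 0$, then:

$$\ell(\mu, \sigma, \xi) = -m r \log \sigma - \sum_{i=1}^{m} \left[1 + \xi \frac{z_i^{(r)} - \mu}{\sigma}\right]^{-1/\xi} - \left(\frac{1}{\xi} + 1\right) \sum_{i=1}^{m} \sum_{k=1}^{r} \log\left[1 + \xi \frac{z_i^{(k)} - \mu}{\sigma}\right] \tag{1}$$

defined on $(\mu, \sigma, \xi)$ such that $1 + \xi \dfrac{z_i^{(k)} - \mu}{\sigma} > 0$ for all $1 \leq i \leq m$ and $1 \leq k \leq r$.

If $\xi = 0$, then:

$$\ell(\mu, \sigma, 0) = -m r \log \sigma - \sum_{i=1}^{m} \exp\left[-\frac{z_i^{(r)} - \mu}{\sigma}\right] - \sum_{i=1}^{m} \sum_{k=1}^{r} \frac{z_i^{(k)} - \mu}{\sigma} \tag{2}$$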
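The log-likelihood of the $r$ largest order statistics can be evaluated with a few lines of plain Python. The sketch below assumes the standard form of this log-likelihood for equations (1) and (2); the function name `r_largest_log_likelihood` and the data are hypothetical, and the function returns minus infinity outside the domain of definition.

```python
import math


def r_largest_log_likelihood(blocks, mu, sigma, xi, r=None):
    """Log-likelihood of the r largest order statistics of each block.

    blocks: list of blocks, each one sorted in decreasing order.
    r: number of order statistics used per block (all of them if None).
    """
    if sigma <= 0.0:
        return -math.inf
    if r is None:
        r = len(blocks[0])
    m = len(blocks)
    total = -m * r * math.log(sigma)
    for block in blocks:
        top = block[:r]  # z^(1) >= ... >= z^(r); top[-1] is z^(r)
        if xi == 0.0:
            total -= math.exp(-(top[-1] - mu) / sigma)
            total -= sum((z - mu) / sigma for z in top)
        else:
            terms = [1.0 + xi * (z - mu) / sigma for z in top]
            if min(terms) <= 0.0:
                return -math.inf  # outside the support
            total -= terms[-1] ** (-1.0 / xi)
            total -= (1.0 / xi + 1.0) * sum(math.log(t) for t in terms)
    return total


blocks = [[3.0, 2.5, 2.0], [4.0, 3.2, 1.5]]
print(r_largest_log_likelihood(blocks, mu=2.0, sigma=1.0, xi=0.1, r=2))
print(r_largest_log_likelihood(blocks, mu=2.0, sigma=1.0, xi=0.0, r=2))
```

With `r=1` the function reduces to the usual block maxima log-likelihood.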

- buildMethodOfLikelihoodMaximizationEstimator(sample, r=0)

Estimate the distribution and the parameter distribution with the R-maxima method.

- Parameters:

- sample : 2-d sequence of float

Block maxima grouped in a sample of size $m$ and dimension $R$.

- r : int, $0 \leq r \leq R$, optional

Number of order statistics taken into account among the $R$ stored ones.

By default, $r=0$ which means that all the maxima are used.

- Returns:

- result : DistributionFactoryLikelihoodResult

The result class.

Notes

The method estimates a GEV distribution parameterized by $\boldsymbol{\theta} = (\mu, \sigma, \xi)$ from a given sample.

The estimator $\hat{\boldsymbol{\theta}}$ is defined using the log-likelihood as detailed in buildMethodOfLikelihoodMaximization().

The result class produced by the method provides:

- the GEV distribution associated to $(\hat{\mu}, \hat{\sigma}, \hat{\xi})$,
- the asymptotic distribution of $(\hat{\mu}, \hat{\sigma}, \hat{\xi})$.

- buildMethodOfXiProfileLikelihood(sample, r=0)

Estimate the distribution with the profile likelihood.

- Parameters:

- sample : 2-d sequence of float

Block maxima grouped in a sample of size $m$ and dimension $R$.

- r : int, $0 \leq r \leq R$, optional

Number of largest order statistics taken into account among the $R$ stored ones.

By default, $r=0$ which means that all the maxima are used.

- Returns:

- distribution : GeneralizedExtremeValue

The estimated distribution.

Notes

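The method estimates a GEV distribution parameterized by $\boldsymbol{\theta} = (\mu, \sigma, \xi)$ from a given sample.

The estimator $(\hat{\mu}, \hat{\sigma}, \hat{\xi})$ is defined using a nested numerical optimization of the log-likelihood:

$$\ell_p(\xi) = \max_{(\mu, \sigma)} \ell(\mu, \sigma, \xi)$$

where $\ell(\mu, \sigma, \xi)$ is detailed in equations (1) and (2) with $r = 1$.

If $\hat{\xi} = \operatorname{argmax}_{\xi} \ell_p(\xi)$ then:

$$(\hat{\mu}, \hat{\sigma}) = \operatorname{argmax}_{(\mu, \sigma)} \ell(\mu, \sigma, \hat{\xi})$$

The starting point of the optimization is initialized from the probability weighted moments method, see [diebolt2008].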
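The nested structure of a profile likelihood in $\xi$ can be illustrated with a deliberately crude plain-Python sketch: the inner maximization over $(\mu, \sigma)$ and the outer maximization over $\xi$ are both done by grid search. This is an assumption made for readability only; the library relies on a numerical optimization solver and on the starting point described above, neither of which is reproduced here. All names, grids and data are hypothetical.

```python
import math


def gev_log_likelihood(sample, mu, sigma, xi):
    """Block maxima GEV log-likelihood (the r = 1 case), -inf outside the domain."""
    if sigma <= 0.0:
        return -math.inf
    total = -len(sample) * math.log(sigma)
    for z in sample:
        u = (z - mu) / sigma
        if xi == 0.0:
            total -= u + math.exp(-u)
        else:
            t = 1.0 + xi * u
            if t <= 0.0:
                return -math.inf
            log_t = math.log(t)
            power = -log_t / xi  # logarithm of t ** (-1 / xi)
            if power > 700.0:    # exp would overflow: negligible likelihood
                return -math.inf
            total -= (1.0 + 1.0 / xi) * log_t + math.exp(power)
    return total


def linspace(a, b, n):
    return [a + (b - a) * i / (n - 1) for i in range(n)]


def profile_log_likelihood(sample, xi, mu_grid, sigma_grid):
    """l_p(xi): maximum over the (mu, sigma) grid of the log-likelihood at fixed xi."""
    return max(gev_log_likelihood(sample, mu, sigma, xi)
               for mu in mu_grid for sigma in sigma_grid)


sample = [2.1, 3.4, 2.8, 4.9, 3.0, 2.5, 3.7, 6.2, 2.9, 3.3]
mu_grid = linspace(min(sample), max(sample), 31)
sigma_grid = linspace(0.2, 3.0, 25)
xi_grid = linspace(-0.5, 0.5, 21)

profile = {xi: profile_log_likelihood(sample, xi, mu_grid, sigma_grid) for xi in xi_grid}
xi_hat = max(profile, key=profile.get)
print(xi_hat, profile[xi_hat])
```

A grid keeps the example dependency-free; in practice a proper optimizer is needed to reach a usable accuracy.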

- buildMethodOfXiProfileLikelihoodEstimator(sample, r=0)

Estimate the distribution and the parameter distribution with the profile likelihood.

- Parameters:

- sample : 2-d sequence of float

Block maxima grouped in a sample of size $m$ and dimension $R$.

- r : int, $0 \leq r \leq R$, optional

Number of largest order statistics taken into account among the $R$ stored ones.

By default, $r=0$ which means that all the maxima are used.

- Returns:

- result : ProfileLikelihoodResult

The result class.

Notes

The method estimates a GEV distribution parameterized by $\boldsymbol{\theta} = (\mu, \sigma, \xi)$ from a given sample.

The estimator $(\hat{\mu}, \hat{\sigma}, \hat{\xi})$ is defined in buildMethodOfXiProfileLikelihood().

The result class produced by the method provides:

- the GEV distribution associated to $(\hat{\mu}, \hat{\sigma}, \hat{\xi})$,
- the asymptotic distribution of $\hat{\xi}$,
- the profile log-likelihood function $\xi \mapsto \ell_p(\xi)$,
- the optimal profile log-likelihood value $\ell_p(\hat{\xi})$,
- confidence intervals of level $(1 - \alpha)$ of $\hat{\xi}$.

- buildReturnLevelEstimator(result, m)

Estimate a return level and its distribution from the GEV parameters.

- Parameters:

- result : DistributionFactoryResult

Likelihood estimation result of a GeneralizedExtremeValue.

- m : float

The return period expressed in terms of number of blocks.

- Returns:

- distribution : Distribution

The asymptotic distribution of $\hat{z}_m$.

Notes

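Let $Z$ be a random variable which follows a GEV distribution parameterized by $\boldsymbol{\theta} = (\mu, \sigma, \xi)$.

The $m$-block return level $z_m$ is the level exceeded on average once every $m$ blocks. The $m$-block return level can be translated into the annual-scale: if there are $n_y$ blocks per year, then the $N$-year return level corresponds to the $m$-block return level where $m = n_y N$.

The $m$-block return level is defined as the quantile of order $1 - p = 1 - 1/m$ of the GEV distribution:

If $\xi \neq 0$:

$$z_m = \mu - \frac{\sigma}{\xi} \left[1 - \left(-\log(1 - p)\right)^{-\xi}\right] \tag{3}$$

If $\xi = 0$:

$$z_m = \mu - \sigma \log\left(-\log(1 - p)\right) \tag{4}$$

The estimator $\hat{z}_m$ of $z_m$ is deduced from the estimator $(\hat{\mu}, \hat{\sigma}, \hat{\xi})$ of $(\mu, \sigma, \xi)$.

The asymptotic distribution of $\hat{z}_m$ is obtained by the Delta method from the asymptotic distribution of $(\hat{\mu}, \hat{\sigma}, \hat{\xi})$. It is a normal distribution with mean $\hat{z}_m$ and variance:

$$\mathrm{Var}(\hat{z}_m) = (\nabla z_m)^T \, \mathbf{V}_n \, \nabla z_m$$

where $\nabla z_m = \left(\dfrac{\partial z_m}{\partial \mu}, \dfrac{\partial z_m}{\partial \sigma}, \dfrac{\partial z_m}{\partial \xi}\right)$ and $\mathbf{V}_n$ is the asymptotic covariance of $(\hat{\mu}, \hat{\sigma}, \hat{\xi})$.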
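The return level and its Delta-method variance can be sketched in plain Python. The sketch assumes the standard GEV quantile expression for equations (3) and (4), with $p = 1/m$; the gradient is written for $\xi \neq 0$ only. The function names and the covariance matrix used in the example are hypothetical.

```python
import math


def return_level(mu, sigma, xi, m):
    """m-block return level: GEV quantile of order 1 - 1/m."""
    y = -math.log(1.0 - 1.0 / m)
    if xi == 0.0:
        return mu - sigma * math.log(y)
    return mu - sigma / xi * (1.0 - y ** (-xi))


def return_level_gradient(mu, sigma, xi, m):
    """Gradient of z_m with respect to (mu, sigma, xi), valid for xi != 0."""
    y = -math.log(1.0 - 1.0 / m)
    d_mu = 1.0
    d_sigma = -(1.0 - y ** (-xi)) / xi
    d_xi = sigma * (1.0 - y ** (-xi)) / xi ** 2 - sigma / xi * y ** (-xi) * math.log(y)
    return [d_mu, d_sigma, d_xi]


def delta_method_variance(gradient, covariance):
    """Quadratic form gradient^T * covariance * gradient."""
    size = len(gradient)
    return sum(gradient[i] * covariance[i][j] * gradient[j]
               for i in range(size) for j in range(size))


# Hypothetical estimates and asymptotic covariance of (mu, sigma, xi).
mu_hat, sigma_hat, xi_hat = 1.0, 2.0, 0.2
covariance = [[0.04, 0.01, 0.0],
              [0.01, 0.02, 0.0],
              [0.0, 0.0, 0.01]]

z_100 = return_level(mu_hat, sigma_hat, xi_hat, 100.0)
variance = delta_method_variance(
    return_level_gradient(mu_hat, sigma_hat, xi_hat, 100.0), covariance)
print(z_100, math.sqrt(variance))
```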

- buildReturnLevelProfileLikelihood(sample, m)

Estimate a return level and its distribution with the profile likelihood.

- Parameters:

- sample : 2-d sequence of float

The block maxima sample of dimension 1.

- m : float

The return period expressed in terms of number of blocks.

- Returns:

- distribution : Normal

The asymptotic distribution of $\hat{z}_m$.

Notes

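Let $Z$ be a random variable which follows a GEV distribution parameterized by $\boldsymbol{\theta} = (\mu, \sigma, \xi)$.

The $m$-block return level $z_m$ is defined in buildReturnLevelEstimator().

The estimator is defined using a nested numerical optimization of the log-likelihood:

$$\ell_p(z_m) = \max_{(\sigma, \xi)} \ell(z_m, \sigma, \xi)$$

where $\ell(z_m, \sigma, \xi)$ is the log-likelihood detailed in (1) and (2) with $r = 1$ and where we substituted $z_m$ for $\mu$ using equations (3) or (4).

The estimator $\hat{z}_m$ of $z_m$ is defined by:

$$\hat{z}_m = \operatorname{argmax}_{z_m} \ell_p(z_m)$$

The asymptotic distribution of $\hat{z}_m$ is normal.

The starting point of the optimization is initialized from the regular maximum likelihood method.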

- buildReturnLevelProfileLikelihoodEstimator(sample, m)

Estimate $z_m$ and its distribution with the profile likelihood.

- Parameters:

- sample : 2-d sequence of float

The block maxima sample of dimension 1.

- m : float

The return period expressed in terms of number of blocks.

- Returns:

- result : ProfileLikelihoodResult

The result class.

Notes

Let $Z$ be a random variable which follows a GEV distribution parameterized by $\boldsymbol{\theta} = (\mu, \sigma, \xi)$.

The $m$-block return level $z_m$ is defined in buildReturnLevelEstimator(). The profile log-likelihood $\ell_p(z_m)$ is defined in buildReturnLevelProfileLikelihood().

The estimator of $z_m$ is defined by:

$$\hat{z}_m = \operatorname{argmax}_{z_m} \ell_p(z_m)$$

The result class produced by the method provides:

- the GEV distribution associated to $(\hat{z}_m, \hat{\sigma}, \hat{\xi})$,
- the asymptotic distribution of $\hat{z}_m$,
- the profile log-likelihood function $z_m \mapsto \ell_p(z_m)$,
- the optimal profile log-likelihood value $\ell_p(\hat{z}_m)$,
- confidence intervals of level $(1 - \alpha)$ of $\hat{z}_m$.

- buildTimeVarying(*args)

Estimate a non stationary GEV from a time-dependent parametric model.

- Parameters:

- sample : 2-d sequence of float

The block maxima grouped in a sample of size $n$ and one dimension.

- timeStamps : 2-d sequence of float

Values of $t$.

- basis : Basis

Functional basis respectively for $\mu(t)$, $\sigma(t)$ and $\xi(t)$.

- muIndices : sequence of int, optional

Indices of basis terms considered for parameter $\mu$.

- sigmaIndices : sequence of int, optional

Indices of basis terms considered for parameter $\sigma$.

- xiIndices : sequence of int, optional

Indices of basis terms considered for parameter $\xi$.

- muLink : Function, optional

The $h_{\mu}$ function.

By default, the identity function.

- sigmaLink : Function, optional

The $h_{\sigma}$ function.

By default, the identity function.

- xiLink : Function, optional

The $h_{\xi}$ function.

By default, the identity function.

- initializationMethod : str, optional

The initialization method for the optimization problem: Gumbel or Static.

By default, the method Gumbel (see ResourceMap, key GeneralizedExtremeValueFactory-InitializationMethod).

- normalizationMethod : str, optional

The data normalization method: CenterReduce, MinMax or None.

By default, the method MinMax (see ResourceMap, key GeneralizedExtremeValueFactory-NormalizationMethod).

- Returns:

- result : TimeVaryingResult

The result class.

Notes

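Let $Z_t$ be a non stationary GEV distribution:

$$Z_t \sim \mathrm{GEV}(\mu(t), \sigma(t), \xi(t))$$

We denote by $(z_{t_1}, \dots, z_{t_n})$ the values of $Z_t$ on the time stamps $(t_1, \dots, t_n)$.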

For numerical reasons, it is recommended to normalize the time stamps. The following mapping is applied:

$$\tau(t) = \frac{t - c}{d}$$

with three ways of defining $(c, d)$:

- the CenterReduce method where $c = \frac{1}{n} \sum_{i=1}^n t_i$ is the mean of the time stamps and $d$ is the standard deviation of the time stamps;
- the MinMax method where $c = t_1$ is the first time and $d = t_n - t_1$ the range of the time stamps. This is the default method;
- the None method where $c = 0$ and $d = 1$: in that case, data are not normalized.

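If $\theta_q$ denotes a component of $\boldsymbol{\theta} = (\mu, \sigma, \xi)$, then $\theta_q$ can be written as a function of $t$:

$$\theta_q(t) = h_q\left(\sum_{i=1}^{d_{\theta_q}} \beta_i^{\theta_q} \varphi_i^{\theta_q}(\tau(t))\right)$$

where:

- $d_{\theta_q}$ is the size of the functional basis involved in the modelling of $\theta_q$,
- $h_q: \mathbb{R} \mapsto \mathbb{R}$ is usually referred to as the inverse-link function of the parameter $\theta_q$,
- each $\varphi_i^{\theta_q}$ is a scalar function $\mathbb{R} \mapsto \mathbb{R}$,
- each $\beta_i^{\theta_q} \in \mathbb{R}$.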
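A time-dependent parameter built from a functional basis can be sketched in plain Python as below. The function `time_varying_parameter`, the polynomial basis and the coefficient values are hypothetical; selecting a subset of the basis (here the first two terms) plays the role of the index arguments such as muIndices.

```python
def time_varying_parameter(t, betas, basis, c=0.0, d=1.0, inverse_link=lambda x: x):
    """Evaluate theta(t) = h(sum_i beta_i * phi_i(tau(t))) with tau(t) = (t - c) / d."""
    if len(betas) != len(basis):
        raise ValueError("one coefficient per basis function is expected")
    tau = (t - c) / d
    return inverse_link(sum(b * phi(tau) for b, phi in zip(betas, basis)))


# Hypothetical polynomial basis: constant, linear and quadratic terms.
basis = [lambda s: 1.0, lambda s: s, lambda s: s * s]

# Linear trend for mu: only the first two basis terms are used.
# MinMax-like normalization of the time stamps with first time 0 and range 10.
mu_t = time_varying_parameter(5.0, [10.0, 2.0], basis[:2], c=0.0, d=10.0)

# Constant xi: only the constant basis term is used.
xi_t = time_varying_parameter(5.0, [0.1], basis[:1], c=0.0, d=10.0)

print(mu_t, xi_t)
```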

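We denote by $d_{\mu}$, $d_{\sigma}$ and $d_{\xi}$ the size of the functional basis of $\mu$, $\sigma$ and $\xi$ respectively. We denote by $\boldsymbol{\beta} = (\beta_1^{\mu}, \dots, \beta_{d_{\mu}}^{\mu}, \beta_1^{\sigma}, \dots, \beta_{d_{\sigma}}^{\sigma}, \beta_1^{\xi}, \dots, \beta_{d_{\xi}}^{\xi})$ the complete vector of parameters.

The estimator of $\boldsymbol{\beta}$ maximizes the likelihood of the non stationary model which is defined by:

$$L(\boldsymbol{\beta}) = \prod_{i=1}^{n} g(z_{t_i}; \boldsymbol{\theta}(t_i))$$

where $g(\cdot\,; \boldsymbol{\theta}(t_i))$ denotes the GEV density function with parameters $\boldsymbol{\theta}(t_i)$ evaluated at $z_{t_i}$.

Then, if none of the $\xi(t_i)$ is zero, the log-likelihood is defined by:

$$\ell(\boldsymbol{\beta}) = -\sum_{i=1}^{n} \left\{ \log \sigma(t_i) + \left(1 + \frac{1}{\xi(t_i)}\right) \log\left[1 + \xi(t_i) \frac{z_{t_i} - \mu(t_i)}{\sigma(t_i)}\right] + \left[1 + \xi(t_i) \frac{z_{t_i} - \mu(t_i)}{\sigma(t_i)}\right]^{-1/\xi(t_i)} \right\}$$

defined on $(\mu, \sigma, \xi)$ such that $1 + \xi(t_i) \dfrac{z_{t_i} - \mu(t_i)}{\sigma(t_i)} > 0$ for all $t_i$.

And if any of the $\xi(t_i)$ is equal to 0, the log-likelihood is defined as:

$$\ell(\boldsymbol{\beta}) = -\sum_{i=1}^{n} \left\{ \log \sigma(t_i) + \frac{z_{t_i} - \mu(t_i)}{\sigma(t_i)} + \exp\left[-\frac{z_{t_i} - \mu(t_i)}{\sigma(t_i)}\right] \right\}$$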

The initialization of the optimization problem is crucial. Two initial points $(\mu_0, \sigma_0, \xi_0)$ are proposed:

- the Gumbel initial point: in that case, we assume that the GEV is a stationary Gumbel distribution and we deduce $(\mu_0, \sigma_0)$ from the empirical mean $\bar{z}$ and the empirical standard deviation $s$ of the data: $\sigma_0 = \dfrac{\sqrt{6}}{\pi} s$ and $\mu_0 = \bar{z} - \gamma \sigma_0$ where $\gamma \approx 0.57722$ is Euler’s constant; then we take the initial point $(\mu_0, \sigma_0, \xi_0)$. This is the default initial point;
- the Static initial point: in that case, we assume that the GEV is stationary and $(\mu_0, \sigma_0, \xi_0)$ is the maximum likelihood estimate resulting from that assumption.

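The result class produced by the method provides:

- the estimator $\hat{\boldsymbol{\beta}}$,
- the asymptotic distribution of $\hat{\boldsymbol{\beta}}$,
- the parameter functions $t \mapsto \boldsymbol{\theta}(t)$,
- the normalizing function $t \mapsto \tau(t)$,
- the optimal log-likelihood value $\ell(\hat{\boldsymbol{\beta}})$,
- the GEV distribution at time $t$,
- the quantile functions of order $p$: $t \mapsto q_p(Z_t)$.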

- getBootstrapSize()

Accessor to the bootstrap size.

- Returns:

- size : int

Size of the bootstrap.

- getClassName()

Accessor to the object’s name.

- Returns:

- class_name : str

The object class name (object.__class__.__name__).

- getKnownParameterIndices()

Accessor to the known parameters indices.

- Returns:

- indices : Indices

Indices of the known parameters.

- getKnownParameterValues()

Accessor to the known parameters values.

- Returns:

- values : Point

Values of known parameters.

- getName()

Accessor to the object’s name.

- Returns:

- name : str

The name of the object.

- getOptimizationAlgorithm()

Accessor to the solver.

- Returns:

- solver : OptimizationAlgorithm

The solver used for numerical optimization of the moments.

- hasName()

Test if the object is named.

- Returns:

- hasName : bool

True if the name is not empty.

- setBootstrapSize(bootstrapSize)

Accessor to the bootstrap size.

- Parameters:

- bootstrapSize : int

The size of the bootstrap.

- setKnownParameter(values, positions)

Accessor to the known parameters.

- Parameters:

- values : sequence of float

Values of known parameters.

- positions : sequence of int

Indices of known parameters.

Examples

When a subset of the parameter vector is known, only the remaining parameters have to be estimated from data.

In the following example, we consider a sample and want to fit a Beta distribution. We assume that the $a$ and $b$ parameters are known beforehand. In this case, we set the third parameter (at index 2) to -1 and the fourth parameter (at index 3) to 1.

>>> import openturns as ot
>>> ot.RandomGenerator.SetSeed(0)
>>> distribution = ot.Beta(2.3, 2.2, -1.0, 1.0)
>>> sample = distribution.getSample(10)
>>> factory = ot.BetaFactory()
>>> # set (a, b) out of (alpha, beta, a, b)
>>> factory.setKnownParameter([-1.0, 1.0], [2, 3])
>>> inf_distribution = factory.build(sample)

- setName(name)

Accessor to the object’s name.

- Parameters:

- name : str

The name of the object.

- setOptimizationAlgorithm(solver)

Accessor to the solver.

- Parameters:

- solver : OptimizationAlgorithm

The solver used for numerical optimization of the moments.

OpenTURNS
