ParametricFunction¶

- class ParametricFunction(*args)¶

Parametric function.

- Available constructor:

ParametricFunction(function, indices, referencePoint, parametersSet)

It defines a parametric function from function by freezing the variables marked by the indices set to the values of referencePoint.

- Parameters:

- function

Function Function with full parameters from which the parametric function is built.

- indicessequence of int

Indices of the frozen variables.

- referencePointsequence of float

Values of the frozen variables. Must be of size of indices if parametersSet is True (default), else its size should be the complementary size of indices.

- parametersSetbool

If True (default), the frozen variables are the ones referenced in indices. Otherwise, the set variables are the ones referenced in the complementary set of indices.

- function

Examples

>>> import openturns as ot >>> f = ot.SymbolicFunction(['x', 'y', 'z'], ['x+y', 'x*z+y']) >>> print(f) [x,y,z]->[x+y,x*z+y]

Then create another function by setting x=2 and y=3:

>>> g=ot.ParametricFunction(f, [0,1], [2,3]) >>> print(g) ParametricEvaluation([x,y,z]->[x+y,x*z+y], parameters positions=[0,1], parameters=[x : 2, y : 3], input positions=[2])

Or by setting z=4 using the complementary set flag:

>>> g = ot.ParametricFunction(f, [0,1], [4], False) >>> print(g.getInputDescription()) [x,y] >>> print(g) ParametricEvaluation([x,y,z]->[x+y,x*z+y], parameters positions=[2], parameters=[z : 4], input positions=[0,1])

Methods

__call__(*args)Call self as a function.

draw(*args)Draw the output of function as a

Graph.Accessor to the number of times the function has been called.

Accessor to the object's name.

Accessor to the description of the inputs and outputs.

Accessor to the evaluation function.

Accessor to the number of times the function has been called.

Accessor to the gradient function.

Accessor to the number of times the gradient of the function has been called.

Accessor to the hessian function.

Accessor to the number of times the hessian of the function has been called.

getId()Accessor to the object's id.

Accessor to the underlying implementation.

Accessor to the description of the input vector.

Accessor to the dimension of the input vector.

getMarginal(*args)Accessor to marginal.

getName()Accessor to the object's name.

Accessor to the description of the output vector.

Accessor to the number of the outputs.

Accessor to the parameter values.

Accessor to the parameter description.

Accessor to the dimension of the parameter.

gradient(inP)Return the Jacobian transposed matrix of the function at a point.

hessian(inP)Return the hessian of the function at a point.

isLinear()Accessor to the linearity of the function.

isLinearlyDependent(index)Accessor to the linearity of the function with regard to a specific variable.

parameterGradient(inP)Accessor to the gradient against the parameter.

setDescription(description)Accessor to the description of the inputs and outputs.

setEvaluation(evaluation)Accessor to the evaluation function.

setGradient(gradient)Accessor to the gradient function.

setHessian(hessian)Accessor to the hessian function.

setInputDescription(inputDescription)Accessor to the description of the input vector.

setName(name)Accessor to the object's name.

setOutputDescription(inputDescription)Accessor to the description of the output vector.

setParameter(parameter)Accessor to the parameter values.

setParameterDescription(description)Accessor to the parameter description.

- __init__(*args)¶

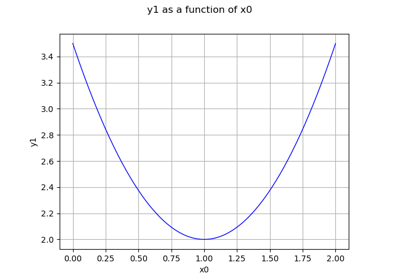

- draw(*args)¶

Draw the output of function as a

Graph.- Available usages:

draw(inputMarg, outputMarg, centralPoint, xiMin, xiMax, ptNb)

draw(firstInputMarg, secondInputMarg, outputMarg, centralPoint, xiMin_xjMin, xiMax_xjMax, ptNbs)

draw(xiMin, xiMax, ptNb)

draw(xiMin_xjMin, xiMax_xjMax, ptNbs)

- Parameters:

- outputMarg, inputMargint,

outputMarg is the index of the marginal to draw as a function of the marginal with index inputMarg.

- firstInputMarg, secondInputMargint,

In the 2D case, the marginal outputMarg is drawn as a function of the two marginals with indexes firstInputMarg and secondInputMarg.

- centralPointsequence of float

Central point with dimension equal to the input dimension of the function.

- xiMin, xiMaxfloat

Define the interval where the curve is plotted.

- xiMin_xjMin, xiMax_xjMaxsequence of float of dimension 2.

In the 2D case, define the intervals where the curves are plotted.

- ptNbint

or list of ints of dimension 2

The number of points to draw the curves.

- outputMarg, inputMargint,

Notes

We note

where

and

, with

and

.

In the first usage:

Draws graph of the given 1D outputMarg marginal

as a function of the given 1D inputMarg marginal with respect to the variation of

in the interval

, when all the other components of

are fixed to the corresponding components of the centralPoint

. Then OpenTURNS draws the graph:

for any

where

is defined by the equation:

In the second usage:

Draws the iso-curves of the given outputMarg marginal

as a function of the given 2D firstInputMarg and secondInputMarg marginals with respect to the variation of

in the interval

, when all the other components of

are fixed to the corresponding components of the centralPoint

. Then OpenTURNS draws the graph:

for any

where

is defined by the equation:

In the third usage:

The same as the first usage but only for function

.

In the fourth usage:

The same as the second usage but only for function

.

Examples

>>> import openturns as ot >>> from openturns.viewer import View >>> f = ot.SymbolicFunction('x', 'sin(2*pi_*x)*exp(-x^2/2)') >>> graph = f.draw(-1.2, 1.2, 100) >>> View(graph).show()

- getCallsNumber()¶

Accessor to the number of times the function has been called.

- Returns:

- calls_numberint

Integer that counts the number of times the function has been called since its creation.

- getClassName()¶

Accessor to the object’s name.

- Returns:

- class_namestr

The object class name (object.__class__.__name__).

- getDescription()¶

Accessor to the description of the inputs and outputs.

- Returns:

- description

Description Description of the inputs and the outputs.

- description

Examples

>>> import openturns as ot >>> f = ot.SymbolicFunction(['x1', 'x2'], ... ['2 * x1^2 + x1 + 8 * x2 + 4 * cos(x1) * x2 + 6']) >>> print(f.getDescription()) [x1,x2,y0]

- getEvaluation()¶

Accessor to the evaluation function.

- Returns:

- function

EvaluationImplementation The evaluation function.

- function

Examples

>>> import openturns as ot >>> f = ot.SymbolicFunction(['x1', 'x2'], ... ['2 * x1^2 + x1 + 8 * x2 + 4 * cos(x1) * x2 + 6']) >>> print(f.getEvaluation()) [x1,x2]->[2 * x1^2 + x1 + 8 * x2 + 4 * cos(x1) * x2 + 6]

- getEvaluationCallsNumber()¶

Accessor to the number of times the function has been called.

- Returns:

- evaluation_calls_numberint

Integer that counts the number of times the function has been called since its creation.

- getGradient()¶

Accessor to the gradient function.

- Returns:

- gradient

GradientImplementation The gradient function.

- gradient

- getGradientCallsNumber()¶

Accessor to the number of times the gradient of the function has been called.

- Returns:

- gradient_calls_numberint

Integer that counts the number of times the gradient of the Function has been called since its creation. Note that if the gradient is implemented by a finite difference method, the gradient calls number is equal to 0 and the different calls are counted in the evaluation calls number.

- getHessian()¶

Accessor to the hessian function.

- Returns:

- hessian

HessianImplementation The hessian function.

- hessian

- getHessianCallsNumber()¶

Accessor to the number of times the hessian of the function has been called.

- Returns:

- hessian_calls_numberint

Integer that counts the number of times the hessian of the Function has been called since its creation. Note that if the hessian is implemented by a finite difference method, the hessian calls number is equal to 0 and the different calls are counted in the evaluation calls number.

- getId()¶

Accessor to the object’s id.

- Returns:

- idint

Internal unique identifier.

- getImplementation()¶

Accessor to the underlying implementation.

- Returns:

- implImplementation

A copy of the underlying implementation object.

- getInputDescription()¶

Accessor to the description of the input vector.

- Returns:

- description

Description Description of the input vector.

- description

Examples

>>> import openturns as ot >>> f = ot.SymbolicFunction(['x1', 'x2'], ... ['2 * x1^2 + x1 + 8 * x2 + 4 * cos(x1) * x2 + 6']) >>> print(f.getInputDescription()) [x1,x2]

- getInputDimension()¶

Accessor to the dimension of the input vector.

- Returns:

- inputDimint

Dimension of the input vector

.

Examples

>>> import openturns as ot >>> f = ot.SymbolicFunction(['x1', 'x2'], ... ['2 * x1^2 + x1 + 8 * x2 + 4 * cos(x1) * x2 + 6']) >>> print(f.getInputDimension()) 2

- getMarginal(*args)¶

Accessor to marginal.

- Parameters:

- indicesint or list of ints

Set of indices for which the marginal is extracted.

- Returns:

- marginal

Function Function corresponding to either

or

, with

and

.

- marginal

- getName()¶

Accessor to the object’s name.

- Returns:

- namestr

The name of the object.

- getOutputDescription()¶

Accessor to the description of the output vector.

- Returns:

- description

Description Description of the output vector.

- description

Examples

>>> import openturns as ot >>> f = ot.SymbolicFunction(['x1', 'x2'], ... ['2 * x1^2 + x1 + 8 * x2 + 4 * cos(x1) * x2 + 6']) >>> print(f.getOutputDescription()) [y0]

- getOutputDimension()¶

Accessor to the number of the outputs.

- Returns:

- number_outputsint

Dimension of the output vector

.

Examples

>>> import openturns as ot >>> f = ot.SymbolicFunction(['x1', 'x2'], ... ['2 * x1^2 + x1 + 8 * x2 + 4 * cos(x1) * x2 + 6']) >>> print(f.getOutputDimension()) 1

- getParameterDescription()¶

Accessor to the parameter description.

- Returns:

- parameter

Description The parameter description.

- parameter

- getParameterDimension()¶

Accessor to the dimension of the parameter.

- Returns:

- parameterDimensionint

Dimension of the parameter.

- gradient(inP)¶

Return the Jacobian transposed matrix of the function at a point.

- Parameters:

- pointsequence of float

Point where the Jacobian transposed matrix is calculated.

- Returns:

- gradient

Matrix The Jacobian transposed matrix of the function at point.

- gradient

Examples

>>> import openturns as ot >>> f = ot.SymbolicFunction(['x1', 'x2'], ... ['2 * x1^2 + x1 + 8 * x2 + 4 * cos(x1) * x2 + 6','x1 + x2']) >>> print(f.gradient([3.14, 4])) [[ 13.5345 1 ] [ 4.00001 1 ]]

- hessian(inP)¶

Return the hessian of the function at a point.

- Parameters:

- pointsequence of float

Point where the hessian of the function is calculated.

- Returns:

- hessian

SymmetricTensor Hessian of the function at point.

- hessian

Examples

>>> import openturns as ot >>> f = ot.SymbolicFunction(['x1', 'x2'], ... ['2 * x1^2 + x1 + 8 * x2 + 4 * cos(x1) * x2 + 6','x1 + x2']) >>> print(f.hessian([3.14, 4])) sheet #0 [[ 20 -0.00637061 ] [ -0.00637061 0 ]] sheet #1 [[ 0 0 ] [ 0 0 ]]

- isLinear()¶

Accessor to the linearity of the function.

- Returns:

- linearbool

True if the function is linear, False otherwise.

- isLinearlyDependent(index)¶

Accessor to the linearity of the function with regard to a specific variable.

- Parameters:

- indexint

The index of the variable with regard to which linearity is evaluated.

- Returns:

- linearbool

True if the function is linearly dependent on the specified variable, False otherwise.

- parameterGradient(inP)¶

Accessor to the gradient against the parameter.

- Returns:

- gradient

Matrix The gradient.

- gradient

- setDescription(description)¶

Accessor to the description of the inputs and outputs.

- Parameters:

- descriptionsequence of str

Description of the inputs and the outputs.

Examples

>>> import openturns as ot >>> f = ot.SymbolicFunction(['x1', 'x2'], ... ['2 * x1^2 + x1 + 8 * x2 + 4 * cos(x1) * x2 + 6']) >>> print(f.getDescription()) [x1,x2,y0] >>> f.setDescription(['a','b','y']) >>> print(f.getDescription()) [a,b,y]

- setEvaluation(evaluation)¶

Accessor to the evaluation function.

- Parameters:

- function

EvaluationImplementation The evaluation function.

- function

- setGradient(gradient)¶

Accessor to the gradient function.

- Parameters:

- gradient_function

GradientImplementation The gradient function.

- gradient_function

Examples

>>> import openturns as ot >>> f = ot.SymbolicFunction(['x1', 'x2'], ... ['2 * x1^2 + x1 + 8 * x2 + 4 * cos(x1) * x2 + 6']) >>> f.setGradient(ot.CenteredFiniteDifferenceGradient( ... ot.ResourceMap.GetAsScalar('CenteredFiniteDifferenceGradient-DefaultEpsilon'), ... f.getEvaluation()))

- setHessian(hessian)¶

Accessor to the hessian function.

- Parameters:

- hessian_function

HessianImplementation The hessian function.

- hessian_function

Examples

>>> import openturns as ot >>> f = ot.SymbolicFunction(['x1', 'x2'], ... ['2 * x1^2 + x1 + 8 * x2 + 4 * cos(x1) * x2 + 6']) >>> f.setHessian(ot.CenteredFiniteDifferenceHessian( ... ot.ResourceMap.GetAsScalar('CenteredFiniteDifferenceHessian-DefaultEpsilon'), ... f.getEvaluation()))

- setInputDescription(inputDescription)¶

Accessor to the description of the input vector.

- Parameters:

- description

Description Description of the input vector.

- description

- setName(name)¶

Accessor to the object’s name.

- Parameters:

- namestr

The name of the object.

- setOutputDescription(inputDescription)¶

Accessor to the description of the output vector.

- Parameters:

- description

Description Description of the output vector.

- description

- setParameter(parameter)¶

Accessor to the parameter values.

- Parameters:

- parametersequence of float

The parameter values.

- setParameterDescription(description)¶

Accessor to the parameter description.

- Parameters:

- parameter

Description The parameter description.

- parameter

Examples using the class¶

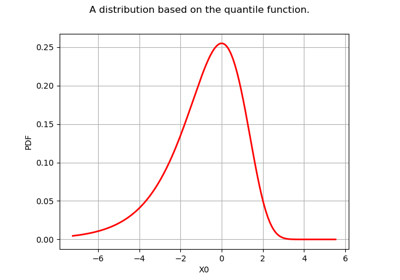

Create your own distribution given its quantile function

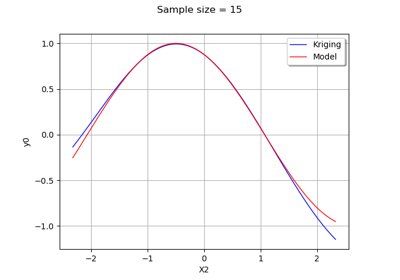

Create a polynomial chaos for the Ishigami function: a quick start guide to polynomial chaos

Evaluate the mean of a random vector by simulations

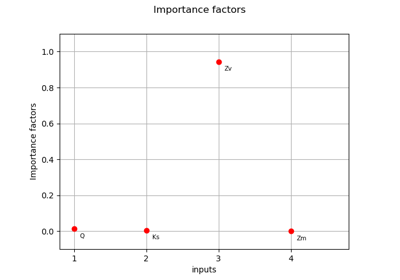

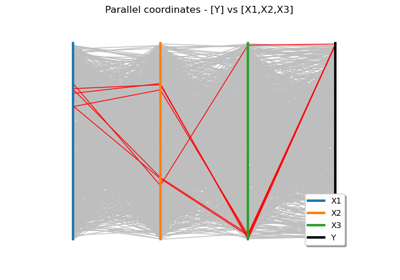

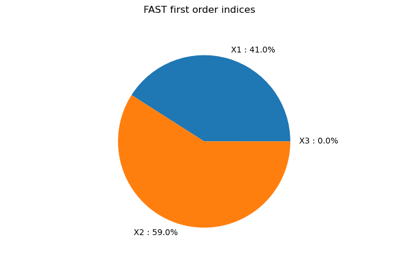

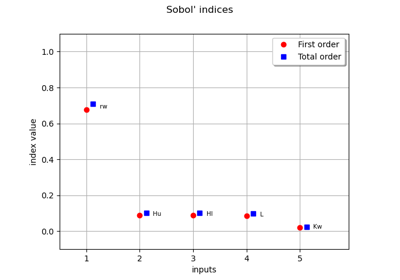

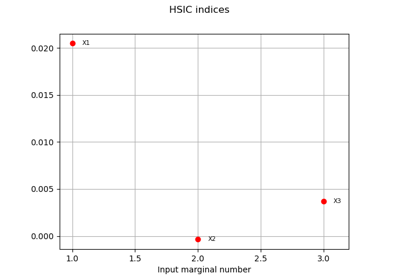

Example of sensitivity analyses on the wing weight model

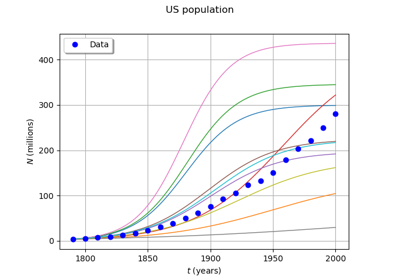

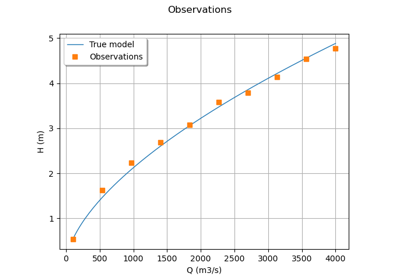

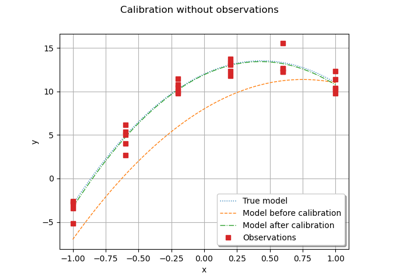

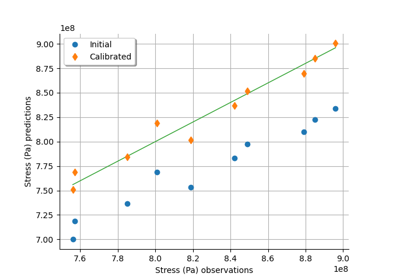

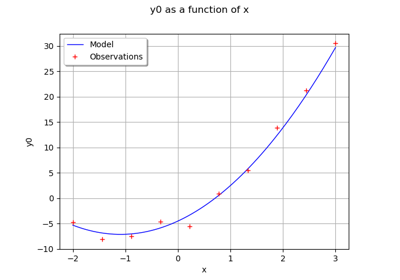

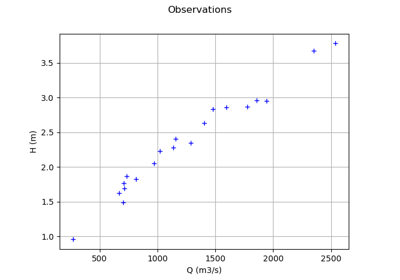

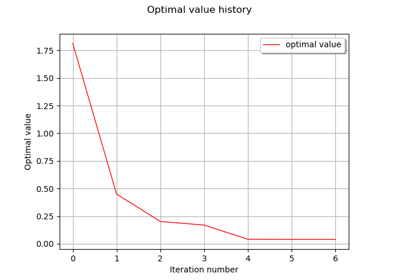

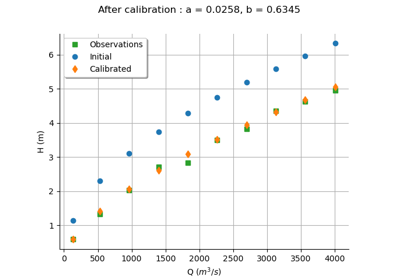

Calibrate a parametric model: a quick-start guide to calibration

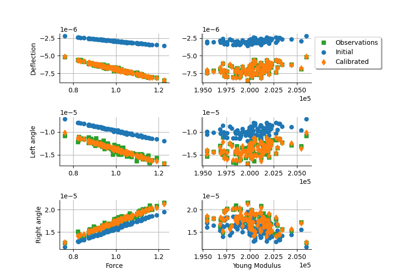

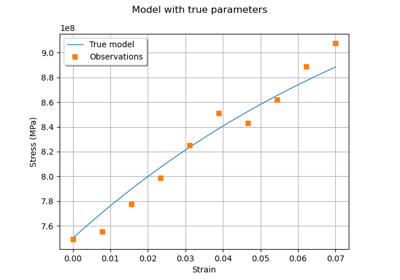

Generate observations of the Chaboche mechanical model

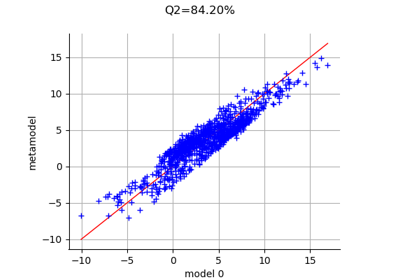

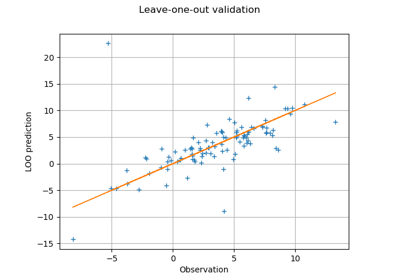

Compute leave-one-out error of a polynomial chaos expansion

OpenTURNS

OpenTURNS